Install and Configure an Ansible Control Node

This objective is made up of the following items:

- Install required packages.

- Create a static host inventory file (covered in other lessons but also shown again).

- Create a configuration file (covered in other lessons, and not shown in this lesson).

- Configure privilege escalation on managed nodes.

- Validate a working configuration using ad-hoc Ansible commands.

- We will create a user for Ansible called ansible.

- The ansible user on the managed nodes will need passwordless sudo.

- The ansible user will need to be able to SSH into the nodes without a password.

On CentOS, the ansible package comes from the EPEL repository, so epel-release must be installed first. Let’s install the required packages:

```
[root@controlnode /]# yum install -y epel-release
[root@controlnode /]# yum -y install ansible
```

Creating ansible user:

```
[root@controlnode /]# useradd ansible
[root@controlnode /]# passwd ansible
```

Allow the ansible user to run any command without a password (add the ansible line with visudo):

```
[root@controlnode /]# visudo
## The COMMANDS section may have other options added to it.
##
## Allow root to run any commands anywhere
root    ALL=(ALL)       ALL
ansible ALL=(ALL)       NOPASSWD: ALL
```
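Instead of editing /etc/sudoers itself, the same rule can live in its own drop-in file under /etc/sudoers.d. The sketch below assumes /etc/sudoers still contains the stock `#includedir /etc/sudoers.d` line, as on a default CentOS 7 install; the function names are hypothetical helpers, not part of any tool. Note that sudo skips files in that directory whose names contain a dot or end in `~`, so a name like `/etc/sudoers.d/ansible` is safe. Syntax validation with `visudo -cf` runs only when the script is executed as root.

```bash
#!/bin/bash
# Hypothetical helpers: build and install a sudoers drop-in for one user.

sudoers_rule() {
    # $1 = user name; same rule as the visudo line above
    printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$1"
}

install_sudoers_dropin() {
    # $1 = user name, $2 = target file, e.g. /etc/sudoers.d/ansible (needs root)
    local user=$1 target=$2 tmp
    tmp=$(mktemp) || return 1
    sudoers_rule "$user" > "$tmp"
    chmod 0440 "$tmp"    # conventional read-only mode for sudoers files
    # As root, refuse to install a file that visudo reports as invalid
    if [ "$(id -u)" -eq 0 ] && command -v visudo >/dev/null 2>&1; then
        if ! visudo -cf "$tmp" >/dev/null; then
            rm -f "$tmp"
            return 1
        fi
    fi
    install -m 0440 "$tmp" "$target"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

On each node, run as root: `install_sudoers_dropin ansible /etc/sudoers.d/ansible`.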

Editing hosts file:

```
[root@controlnode /]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
172.30.9.55 controlnode controlnode.example.com
172.30.9.56 managedhost1 managedhost1.example.com
172.30.9.57 managedhost2 managedhost2.example.com
```
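Typing these entries by hand on every node makes typos easy. A small idempotent helper, sketched here under the assumption that all nodes share the `example.com` domain used above; `add_host_entry` is a hypothetical name. It appends `IP short-name FQDN` only when the short name is not already listed, so running it twice changes nothing.

```bash
#!/bin/bash
# Hypothetical helper: add "IP short-name short-name.domain" to a hosts file
# unless the short name already appears as a field on some line.

add_host_entry() {
    # $1 = hosts file, $2 = IP address, $3 = short name, $4 = domain
    local file=$1 ip=$2 name=$3 domain=$4
    # awk exits 0 if the short name is found in any field after the address
    if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present: nothing to do
    fi
    printf '%s %s %s.%s\n' "$ip" "$name" "$name" "$domain" >> "$file"
}
```

For example, as root: `add_host_entry /etc/hosts 172.30.9.56 managedhost1 example.com`.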

Editing inventory file:

```
[root@controlnode /]# vi /etc/ansible/hosts
# Here's another example of host ranges, this time there are no
# leading 0s:

## db-[99:101]-node.example.com

localhost
managedhost1
managedhost2
```
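The inventory above lists the hosts without groups, so the only practical pattern is `all`. Putting the managed hosts in a group lets ad-hoc commands and playbooks target a subset, for example `ansible managed -m ping`, and a host range like the commented `db-[99:101]` example collapses both managed hosts into one line. A sketch that writes such an INI inventory; the group names `control` and `managed` and the helper name are assumptions for illustration:

```bash
#!/bin/bash
# Hypothetical helper: write an INI-style inventory with two groups.
# managedhost[1:2] is an inventory range that expands to managedhost1 and managedhost2.

write_inventory() {
    # $1 = output file (e.g. /etc/ansible/hosts, which needs root)
    cat > "$1" <<'EOF'
[control]
localhost

[managed]
managedhost[1:2]
EOF
}
```

To see how Ansible reads the result: `ansible-inventory -i FILE --graph`.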

Checking connectivity to managedhost1:

```
[root@controlnode /]# ping managedhost1
PING managedhost1 (172.30.9.56) 56(84) bytes of data.
64 bytes from managedhost1 (172.30.9.56): icmp_seq=1 ttl=64 time=0.458 ms
64 bytes from managedhost1 (172.30.9.56): icmp_seq=2 ttl=64 time=0.340 ms
64 bytes from managedhost1 (172.30.9.56): icmp_seq=3 ttl=64 time=0.344 ms
^C
--- managedhost1 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 1999ms
rtt min/avg/max/mdev = 0.340/0.380/0.458/0.059 ms
```

Connecting to managedhost1:

```
[root@controlnode /]# ssh managedhost1
The authenticity of host 'managedhost1 (172.30.9.56)' can't be established.
ECDSA key fingerprint is d8:44:a4:a2:a8:b4:c9:54:22:19:69:42:2c:07:a6:35.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'managedhost1,172.30.9.56' (ECDSA) to the list of known hosts.
root@managedhost1's password:
Last login: Mon May 25 15:36:10 2020
[root@managedhost1 ~]#
```

Creating ansible user on managedhost1:

```
[root@managedhost1 ~]# useradd ansible
[root@managedhost1 ~]# passwd ansible
Changing password for user ansible.
New password:
BAD PASSWORD: The password is shorter than 8 characters
Retype new password:
passwd: all authentication tokens updated successfully.
```

Allowing ansible user to run any command on managedhost1:

```
[root@managedhost1 ~]# visudo
## The COMMANDS section may have other options added to it.
##
## Allow root to run any commands anywhere
root    ALL=(ALL)       ALL
ansible ALL=(ALL)       NOPASSWD: ALL
```

Logging out from managedhost1:

```
[root@managedhost1 ~]# exit
logout
Connection to managedhost1 closed.
```

Doing the same on managedhost2:

```
[root@controlnode /]# ping managedhost2
PING managedhost2 (172.30.9.57) 56(84) bytes of data.
64 bytes from managedhost2 (172.30.9.57): icmp_seq=1 ttl=64 time=0.456 ms
64 bytes from managedhost2 (172.30.9.57): icmp_seq=2 ttl=64 time=0.388 ms
64 bytes from managedhost2 (172.30.9.57): icmp_seq=3 ttl=64 time=0.198 ms
^C
--- managedhost2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2000ms
rtt min/avg/max/mdev = 0.198/0.347/0.456/0.110 ms
[root@controlnode /]# ssh managedhost2
The authenticity of host 'managedhost2 (172.30.9.57)' can't be established.
ECDSA key fingerprint is d8:44:a4:a2:a8:b4:c9:54:22:19:69:42:2c:07:a6:35.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'managedhost2,172.30.9.57' (ECDSA) to the list of known hosts.
root@managedhost2's password:
Last login: Mon May 25 15:45:49 2020
[root@managedhost2 ~]# useradd ansible
[root@managedhost2 ~]# passwd ansible
Changing password for user ansible.
New password:
BAD PASSWORD: The password is shorter than 8 characters
Retype new password:
passwd: all authentication tokens updated successfully.
[root@managedhost2 ~]# visudo
## The COMMANDS section may have other options added to it.
##
## Allow root to run any commands anywhere
root    ALL=(ALL)       ALL
ansible ALL=(ALL)       NOPASSWD: ALL
[root@managedhost2 ~]# exit
logout
Connection to managedhost2 closed.
```

On controlnode, switch to the ansible user:

```
[root@controlnode /]# su - ansible
[ansible@controlnode ~]$
```

Create an SSH key pair (press Enter at each prompt to accept the default file and an empty passphrase):

```
[ansible@controlnode ~]$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/ansible/.ssh/id_rsa):
Created directory '/home/ansible/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/ansible/.ssh/id_rsa.
Your public key has been saved in /home/ansible/.ssh/id_rsa.pub.
The key fingerprint is:
e4:5b:eb:13:ca:b6:3a:be:a7:c9:48:b5:79:63:44:9b ansible@controlnode.example.com
```

Copy the public key to localhost and to the managed hosts (each host asks once for the ansible user’s password):

```
[ansible@controlnode ~]$ ssh-copy-id localhost
The authenticity of host 'localhost (::1)' can't be established.
ECDSA key fingerprint is d8:44:a4:a2:a8:b4:c9:54:22:19:69:42:2c:07:a6:35.
Are you sure you want to continue connecting (yes/no)? yes
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
ansible@localhost's password:

Number of key(s) added: 1

Now try logging into the machine, with:   "ssh 'localhost'"
and check to make sure that only the key(s) you wanted were added.

[ansible@controlnode ~]$ ssh-copy-id managedhost1
The authenticity of host 'managedhost1 (172.30.9.56)' can't be established.
ECDSA key fingerprint is d8:44:a4:a2:a8:b4:c9:54:22:19:69:42:2c:07:a6:35.
Are you sure you want to continue connecting (yes/no)? yes
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
ansible@managedhost1's password:
Permission denied, please try again.
ansible@managedhost1's password:

Number of key(s) added: 1

Now try logging into the machine, with:   "ssh 'managedhost1'"
and check to make sure that only the key(s) you wanted were added.

[ansible@controlnode ~]$ ssh-copy-id managedhost2
The authenticity of host 'managedhost2 (172.30.9.57)' can't be established.
ECDSA key fingerprint is d8:44:a4:a2:a8:b4:c9:54:22:19:69:42:2c:07:a6:35.
Are you sure you want to continue connecting (yes/no)? yes
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
ansible@managedhost2's password:

Number of key(s) added: 1

Now try logging into the machine, with:   "ssh 'managedhost2'"
and check to make sure that only the key(s) you wanted were added.
```
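With more nodes, running ssh-copy-id by hand for each one gets tedious. A sketch that reads host names from a simple inventory like the one used here and pushes the key to each; `inventory_hosts` and `copy_key_to_all` are hypothetical helpers, and the parser assumes one host per line, `#` comments, optional `[group]` headers, no `:vars` sections and no host ranges (a line like `managedhost[1:2]` is not expanded). Each host still prompts once for the ansible password.

```bash
#!/bin/bash
# Hypothetical helper: print host names from a simple INI inventory.
# Skips blank lines, comments and [group] headers; keeps only the first field,
# so "host var=value" yields "host". Host ranges are NOT expanded.

inventory_hosts() {
    awk '
        /^[ \t]*$/  { next }    # blank line
        /^[ \t]*#/  { next }    # comment
        /^[ \t]*\[/ { next }    # [group] header
        { print $1 }
    ' "$1" | sort -u
}

copy_key_to_all() {
    # $1 = inventory file; ssh-copy-id asks for each host's password once
    local host
    for host in $(inventory_hosts "$1"); do
        ssh-copy-id "$host" || echo "failed: $host" >&2
    done
}
```

As the ansible user: `copy_key_to_all /etc/ansible/hosts`.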

Checking SSH connectivity:

```
[ansible@controlnode ~]$ ssh localhost
Last login: Mon May 25 17:09:44 2020
[ansible@controlnode ~]$ logout
Connection to localhost closed.
[ansible@controlnode ~]$ ssh managedhost1
Last login: Mon May 25 17:09:56 2020 from controlnode
[ansible@managedhost1 ~]$ logout
Connection to managedhost1 closed.
[ansible@controlnode ~]$ ssh managedhost2
[ansible@managedhost2 ~]$ logout
Connection to managedhost2 closed.
```
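Logging in interactively shows the key works, but a script can check the same thing without ever falling back to a password prompt: with `-o BatchMode=yes`, ssh fails instead of asking for a password. A sketch; the function name is hypothetical, and the `SSH` variable is a hypothetical hook that exists only so the function can be exercised without real hosts.

```bash
#!/bin/bash
# Report hosts that do not accept key-based login for the current user.

check_key_login() {
    # $@ = host names; returns the number of failing hosts (0 = all good)
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes: never prompt; ConnectTimeout avoids long hangs.
        # </dev/null stops ssh from reading the caller's stdin.
        if "${SSH:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
            echo "ok:     $host"
        else
            echo "FAILED: $host"
            failed=$((failed + 1))
        fi
    done
    return "$failed"
}
```

For example: `check_key_login localhost managedhost1 managedhost2`.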

Let’s check whether Ansible can connect to all the hosts in the inventory:

```
[ansible@controlnode ~]$ ansible all -m ping
localhost | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
managedhost1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
managedhost2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
```

The same with the -b option (become, that is, run the module as root through sudo):

```
[ansible@controlnode ~]$ ansible all -b -m ping
managedhost1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
managedhost2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
localhost | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
```
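With more than a handful of hosts, the JSON output is hard to scan. The ansible command has a one-line output mode, `-o` (`--one-line`), which prints one `host | STATUS => {...}` line per host; a small filter can then list only the hosts that did not come back as SUCCESS or CHANGED. A sketch; the filter name is hypothetical, and it assumes the `host | STATUS` prefix shown in the output above.

```bash
#!/bin/bash
# Hypothetical filter: read "ansible ... -o" output on stdin and print the
# hosts whose status is neither SUCCESS nor CHANGED. Exit status 1 if any.

failed_hosts() {
    awk -F' [|] ' '
        NF >= 2 {
            split($2, word, " ")    # first word of the second field is the status
            if (word[1] != "SUCCESS" && word[1] != "CHANGED") { print $1; bad = 1 }
        }
        END { exit bad }
    '
}
```

For example: `ansible all -b -m ping -o | failed_hosts`.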

Simple Shell Scripts for Running Ad-Hoc Commands

Why shell scripts?

- Shell scripts can be used to hide complexity.

- You can use shell scripts easily with Ansible ad-hoc commands.

- People not experienced in Ansible can leverage them.

- People not skilled in Ansible can create and use them.

- There is no need to know YAML and .yml formatting.

Here is a simple script that installs the package given as its argument on all managed hosts:

```
[ansible@controlnode ~]$ pwd
/home/ansible
[ansible@controlnode ~]$ mkdir scripts
[ansible@controlnode ~]$ cd scripts
[ansible@controlnode scripts]$ vi install-package.sh
[ansible@controlnode scripts]$ chmod +x install-package.sh
[ansible@controlnode scripts]$ cat install-package.sh
#!/bin/bash
# Note cmd line variable is $1
if [ -n "$1" ]; then
    echo "Package to install is $1"
    ansible all -b -m yum -a "name=$1 state=present"
else
    echo "Package to install was not supplied, exiting" >&2
    exit 1
fi
```
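A slightly extended sketch of the same idea: it prints usage to stderr and returns non-zero when no package is given, and it accepts several packages, passing them to the yum module as one comma-separated `name` list. The `HOSTS` and `ANSIBLE` overrides are hypothetical additions, not part of the original script; `ANSIBLE=echo` turns it into a dry run that only prints the command.

```bash
#!/bin/bash
# Sketch of install-package.sh as a function: install one or more packages
# on the hosts matching $HOSTS (default: all) with the yum module, as root (-b).

install_package() {
    if [ "$#" -eq 0 ]; then
        echo "usage: install_package PACKAGE [PACKAGE...]" >&2
        return 1
    fi
    local pkgs
    pkgs=$(IFS=,; echo "$*")    # elinks httpd -> elinks,httpd
    echo "Packages to install: $pkgs"
    "${ANSIBLE:-ansible}" "${HOSTS:-all}" -b -m yum -a "name=$pkgs state=present"
}
```

To use it as a script, end the file with `install_package "$@"`; a dry run looks like `ANSIBLE=echo ./install-package.sh elinks httpd`.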

Let’s install elinks on the managed hosts using the script:

```
[ansible@controlnode scripts]$ ./install-package.sh elinks
Package to install is elinks
managedhost2 | CHANGED => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": true,
    "changes": {
        "installed": [
            "elinks"
        ]
    },
    "msg": "",
    "rc": 0,
    "results": [
        "Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n * base: centos.slaskdatacenter.com\n * extras: centos.slaskdatacenter.com\n * updates: centos.slaskdatacenter.com\nResolving Dependencies\n--> Running transaction check\n---> Package elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 will be installed\n--> Processing Dependency: libnss_compat_ossl.so.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Processing Dependency: libmozjs185.so.1.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Running transaction check\n---> Package js.x86_64 1:1.8.5-20.el7 will be installed\n---> Package nss_compat_ossl.x86_64 0:0.9.6-8.el7 will be installed\n--> Finished Dependency Resolution\n\nDependencies Resolved\n\n================================================================================\n Package Arch Version Repository\n Size\n================================================================================\nInstalling:\n elinks x86_64 0.12-0.37.pre6.el7.0.1 base 882 k\nInstalling for dependencies:\n js x86_64 1:1.8.5-20.el7 base 2.3 M\n nss_compat_ossl x86_64 0.9.6-8.el7 base 37 k\n\nTransaction Summary\n================================================================================\nInstall 1 Package (+2 Dependent packages)\n\nTotal download size: 3.2 M\nInstalled size: 9.6 M\nDownloading packages:\n--------------------------------------------------------------------------------\nTotal 12 MB/s | 3.2 MB 00:00 \nRunning transaction check\nRunning transaction test\nTransaction test succeeded\nRunning transaction\n Installing : nss_compat_ossl-0.9.6-8.el7.x86_64 1/3 \n Installing : 1:js-1.8.5-20.el7.x86_64 2/3 \n Installing : elinks-0.12-0.37.pre6.el7.0.1.x86_64 3/3 \n Verifying : elinks-0.12-0.37.pre6.el7.0.1.x86_64 1/3 \n Verifying : 1:js-1.8.5-20.el7.x86_64 2/3 \n Verifying : nss_compat_ossl-0.9.6-8.el7.x86_64 3/3 \n\nInstalled:\n elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 \n\nDependency Installed:\n js.x86_64 1:1.8.5-20.el7 nss_compat_ossl.x86_64 0:0.9.6-8.el7 \n\nComplete!\n"
    ]
}
managedhost1 | CHANGED => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": true,
    "changes": {
        "installed": [
            "elinks"
        ]
    },
    "msg": "",
    "rc": 0,
    "results": [
        "Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n * base: centos2.hti.pl\n * extras: centos1.hti.pl\n * updates: centos1.hti.pl\nResolving Dependencies\n--> Running transaction check\n---> Package elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 will be installed\n--> Processing Dependency: libnss_compat_ossl.so.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Processing Dependency: libmozjs185.so.1.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Running transaction check\n---> Package js.x86_64 1:1.8.5-20.el7 will be installed\n---> Package nss_compat_ossl.x86_64 0:0.9.6-8.el7 will be installed\n--> Finished Dependency Resolution\n\nDependencies Resolved\n\n================================================================================\n Package Arch Version Repository\n Size\n================================================================================\nInstalling:\n elinks x86_64 0.12-0.37.pre6.el7.0.1 base 882 k\nInstalling for dependencies:\n js x86_64 1:1.8.5-20.el7 base 2.3 M\n nss_compat_ossl x86_64 0.9.6-8.el7 base 37 k\n\nTransaction Summary\n================================================================================\nInstall 1 Package (+2 Dependent packages)\n\nTotal download size: 3.2 M\nInstalled size: 9.6 M\nDownloading packages:\n--------------------------------------------------------------------------------\nTotal 538 kB/s | 3.2 MB 00:06 \nRunning transaction check\nRunning transaction test\nTransaction test succeeded\nRunning transaction\n Installing : nss_compat_ossl-0.9.6-8.el7.x86_64 1/3 \n Installing : 1:js-1.8.5-20.el7.x86_64 2/3 \n Installing : elinks-0.12-0.37.pre6.el7.0.1.x86_64 3/3 \n Verifying : elinks-0.12-0.37.pre6.el7.0.1.x86_64 1/3 \n Verifying : 1:js-1.8.5-20.el7.x86_64 2/3 \n Verifying : nss_compat_ossl-0.9.6-8.el7.x86_64 3/3 \n\nInstalled:\n elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 \n\nDependency Installed:\n js.x86_64 1:1.8.5-20.el7 nss_compat_ossl.x86_64 0:0.9.6-8.el7 \n\nComplete!\n"
    ]
}
localhost | CHANGED => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": true,
    "changes": {
        "installed": [
            "elinks"
        ]
    },
    "msg": "",
    "rc": 0,
    "results": [
        "Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n * base: centos.slaskdatacenter.com\n * epel: mirror.vpsnet.com\n * extras: centos.slaskdatacenter.com\n * updates: centos.slaskdatacenter.com\nResolving Dependencies\n--> Running transaction check\n---> Package elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 will be installed\n--> Processing Dependency: libnss_compat_ossl.so.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Processing Dependency: libmozjs185.so.1.0()(64bit) for package: elinks-0.12-0.37.pre6.el7.0.1.x86_64\n--> Running transaction check\n---> Package js.x86_64 1:1.8.5-20.el7 will be installed\n---> Package nss_compat_ossl.x86_64 0:0.9.6-8.el7 will be installed\n--> Finished Dependency Resolution\n\nDependencies Resolved\n\n================================================================================\n Package Arch Version Repository\n Size\n================================================================================\nInstalling:\n elinks x86_64 0.12-0.37.pre6.el7.0.1 base 882 k\nInstalling for dependencies:\n js x86_64 1:1.8.5-20.el7 base 2.3 M\n nss_compat_ossl x86_64 0.9.6-8.el7 base 37 k\n\nTransaction Summary\n================================================================================\nInstall 1 Package (+2 Dependent packages)\n\nTotal download size: 3.2 M\nInstalled size: 9.6 M\nDownloading packages:\n--------------------------------------------------------------------------------\nTotal 568 kB/s | 3.2 MB 00:05 \nRunning transaction check\nRunning transaction test\nTransaction test succeeded\nRunning transaction\n Installing : nss_compat_ossl-0.9.6-8.el7.x86_64 1/3 \n Installing : 1:js-1.8.5-20.el7.x86_64 2/3 \n Installing : elinks-0.12-0.37.pre6.el7.0.1.x86_64 3/3 \n Verifying : elinks-0.12-0.37.pre6.el7.0.1.x86_64 1/3 \n Verifying : 1:js-1.8.5-20.el7.x86_64 2/3 \n Verifying : nss_compat_ossl-0.9.6-8.el7.x86_64 3/3 \n\nInstalled:\n elinks.x86_64 0:0.12-0.37.pre6.el7.0.1 \n\nDependency Installed:\n js.x86_64 1:1.8.5-20.el7 nss_compat_ossl.x86_64 0:0.9.6-8.el7 \n\nComplete!\n"
    ]
}
```

Ansible and Firewall Rules

• There are Ansible modules that can be used with firewalls.

• Like other modules, the firewalld module can be used to add or remove rules.

• Here is the URL for more information: https://docs.ansible.com/ansible/latest/modules/firewalld_module.html

• There is also a module for iptables: https://docs.ansible.com/ansible/latest/modules/iptables_module.html

A simple playbook that installs, enables, and starts the firewalld service:

```
[ansible@controlnode scripts]$ cat install-firewalld.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: install firewalld
    action: yum name=firewalld state=installed
  - name: Enable firewalld on system reboot
    service: name=firewalld enabled=yes
  - name: Start service firewalld, if not started
    service:
      name: firewalld
      state: started
```

Check the syntax of the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check install-firewalld.yml

playbook: install-firewalld.yml
```

Run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook install-firewalld.yml

PLAY [all] ************************************************************************************************************************************

TASK [install firewalld] **********************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

TASK [Enable firewalld on system reboot] ******************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Start service firewalld, if not started] ************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

The setup-server.yml playbook installs elinks and Apache (httpd), then enables and starts the httpd service:

```
[ansible@controlnode scripts]$ cat setup-server.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: install elinks
    action: yum name=elinks state=installed
  - name: install httpd
    action: yum name=httpd state=installed
  - name: Enable & start Apache on system reboot
    service: name=httpd enabled=yes state=started
[ansible@controlnode scripts]$ ansible-playbook --syntax-check setup-server.yml

playbook: setup-server.yml
```

Run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook setup-server.yml

PLAY [all] ************************************************************************************************************************************

TASK [install elinks] *************************************************************************************************************************
ok: [managedhost1]
ok: [localhost]
ok: [managedhost2]

TASK [install httpd] **************************************************************************************************************************
changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

TASK [Enable & start Apache on system reboot] *************************************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

At this point we still can’t connect to the HTTP service, because firewalld blocks port 80:

```
[ansible@controlnode scripts]$ curl managedhost1
curl: (7) Failed connect to managedhost1:80; No route to host
```
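curl tests HTTP specifically. To check only whether a TCP port accepts connections, bash can open one through its `/dev/tcp/HOST/PORT` redirection. A sketch with a hypothetical helper name, using `timeout` from coreutils so a filtered port cannot hang the check:

```bash
#!/bin/bash
# Return 0 if a TCP connection to HOST:PORT succeeds within 3 seconds.

port_open() {
    # $1 = host, $2 = port. /dev/tcp is handled by bash itself, not a real file;
    # the inner bash receives host and port as its positional parameters.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example: `for h in managedhost1 managedhost2; do port_open "$h" 80 && echo "$h:80 open" || echo "$h:80 closed or filtered"; done`.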

So let’s create a firewall-rule.yml playbook:

```
[ansible@controlnode scripts]$ cat firewall-rule.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - firewalld:
      service: http
      permanent: yes
      state: enabled
  - name: Restart service firewalld
    service:
      name: firewalld
      state: restarted
```
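Restarting firewalld is one way to load the new permanent rule. The firewalld module also has an `immediate` option, which applies a permanent change to the running configuration right away, so the restart task can be dropped. A sketch that generates such a playbook for any firewalld service name; the generator function and the output file names are hypothetical:

```bash
#!/bin/bash
# Hypothetical generator: write a playbook that opens a firewalld service
# permanently and in the running configuration, without restarting firewalld.

write_firewall_playbook() {
    # $1 = output file, $2 = firewalld service name (e.g. http, https)
    cat > "$1" <<EOF
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: Allow $2 permanently and in the running config
    firewalld:
      service: $2
      permanent: yes
      immediate: yes
      state: enabled
EOF
}
```

For example: `write_firewall_playbook firewall-https.yml https && ansible-playbook --syntax-check firewall-https.yml`.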

Check the syntax and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check firewall-rule.yml

playbook: firewall-rule.yml
[ansible@controlnode scripts]$ ansible-playbook firewall-rule.yml

PLAY [all] ************************************************************************************************************************************

TASK [firewalld] ******************************************************************************************************************************
changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

TASK [Restart service firewalld] **************************************************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Now we can connect from controlnode to the Apache service on managedhost1:

```
[ansible@controlnode scripts]$ curl managedhost1
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.1//EN" "http://www.w3.org/TR/xhtml11/DTD/xhtml11.dtd"><html><head>
<meta http-equiv="content-type" content="text/html; charset=UTF-8">
<title>Apache HTTP Server Test Page powered by CentOS</title>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
```

We can also connect from managedhost1 to the Apache service on controlnode:

```
[ansible@controlnode scripts]$ ssh managedhost1
Last login: Mon May 25 18:20:07 2020 from controlnode
[ansible@managedhost1 ~]$ curl controlnode
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.1//EN" "http://www.w3.org/TR/xhtml11/DTD/xhtml11.dtd"><html><head>
<meta http-equiv="content-type" content="text/html; charset=UTF-8">
<title>Apache HTTP Server Test Page powered by CentOS</title>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
```

Archiving

The Archive Module

- There are Ansible modules that can be used for archive purposes.

- It is part of the EX407 exam objectives.

- Archive Module Documentation page: https://docs.ansible.com/ansible/latest/modules/archive_module.html

- Assumes the compression source exists on the target.

- Does not copy source files from the local system to the target before archiving.

- You can delete source files after archiving by using the remove=true option.
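For a directory, the archive module with `format: gz` produces a gzip-compressed tar file whose members are stored relative to the directory’s parent (the extracted listing later in this lesson shows entries such as `log/messages`). On a single machine, roughly the same result can be sketched with tar. The helper name is hypothetical, and its optional `remove` argument only mimics the module’s remove option.

```bash
#!/bin/bash
# Hypothetical local equivalent of: archive: path=SRC dest=DEST format=gz [remove=true]

archive_dir() {
    # $1 = source directory, $2 = destination .tar.gz, $3 = "remove" to delete the source
    local src=${1%/} dest=$2
    # -C parent + basename stores entries as "log/...", not "/var/log/..."
    tar -czf "$dest" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    if [ "${3:-}" = "remove" ]; then
        rm -rf -- "$src"
    fi
}
```

For example, as root: `archive_dir /var/log /home/ansible/logs.tar.gz`.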

The Unarchive Module

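- The opposite module is unarchive.

- Unarchive Module Documentation page: https://docs.ansible.com/ansible/latest/modules/unarchive_module.html

- If checksum validation is required, fetch the file with get_url or uri instead, then unpack it with remote_src: yes.

- By default, it copies the archive from the control node to the target before unpacking it (remote_src: yes unpacks an archive that is already on the target).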
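The checksum advice comes down to an order of operations: verify the archive first, and unpack it only if the digest matches. A local sketch of that order with sha256sum and tar; the helper name is hypothetical, and the expected digest would come from wherever the archive is published.

```bash
#!/bin/bash
# Hypothetical helper: unpack a .tar.gz only if its SHA-256 digest matches.

verify_and_extract() {
    # $1 = archive, $2 = expected sha256 hex digest, $3 = destination directory
    local archive=$1 expected=$2 dest=$3
    # sha256sum -c reads "<digest>  <file>" lines (two spaces) from stdin here
    if ! printf '%s  %s\n' "$expected" "$archive" | sha256sum -c - >/dev/null 2>&1; then
        echo "checksum mismatch: $archive" >&2
        return 1
    fi
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}
```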

Example of a playbook with the archive module (despite the task name, the archive is written to /home/ansible/logs.tar.gz):

```
[ansible@controlnode scripts]$ cat backup-logs.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: Compress directory /var/log/ into /home/ansible/logs.zip
    archive:
      path: /var/log
      dest: /home/ansible/logs.tar.gz
      owner: ansible
      group: ansible
      format: gz
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check backup-logs.yml

playbook: backup-logs.yml
[ansible@controlnode scripts]$ ansible-playbook backup-logs.yml

PLAY [all] ************************************************************************************************************************************

TASK [Compress directory /var/log/ into /home/ansible/logs.zip] *******************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Now we can check whether the playbook created the archives on the managed hosts:

```
[ansible@controlnode scripts]$ ll /home/ansible/logs.tar.gz
-rw-r--r--. 1 ansible ansible 440837 05-25 18:37 /home/ansible/logs.tar.gz
[ansible@controlnode scripts]$ ansible all -a "/bin/ls -l /home/ansible/logs.tar.gz"
managedhost2 | CHANGED | rc=0 >>
-rw-r--r--. 1 ansible ansible 401244 05-25 18:37 /home/ansible/logs.tar.gz
managedhost1 | CHANGED | rc=0 >>
-rw-r--r--. 1 ansible ansible 421266 05-25 18:37 /home/ansible/logs.tar.gz
localhost | CHANGED | rc=0 >>
-rw-r--r--. 1 ansible ansible 440837 05-25 18:37 /home/ansible/logs.tar.gz
```

Now let’s add the fetch module to copy each archive back to the control node:

```
[ansible@controlnode scripts]$ cat backup-logs2.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: Compress directory /var/log/ into /home/ansible/logs.zip
    archive:
      path: /var/log
      dest: /home/ansible/logs.tar.gz
      owner: ansible
      group: ansible
      format: gz
  - name: Fetch the log files to the local filesystem
    fetch:
      src: /home/ansible/logs.tar.gz
      dest: logbackup-{{ inventory_hostname }}.tar.gz
      flat: yes
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check backup-logs2.yml

playbook: backup-logs2.yml
[ansible@controlnode scripts]$ ansible-playbook backup-logs2.yml

PLAY [all] ************************************************************************************************************************************

TASK [Compress directory /var/log/ into /home/ansible/logs.zip] *******************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

TASK [Fetch the log files to the local filesystem] ********************************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check that the playbook really fetched the backups and stored them locally:

```
[ansible@controlnode scripts]$ ll logbackup*
-rw-rw-r--. 1 ansible ansible 444665 05-25 18:56 logbackup-localhost.tar.gz
-rw-rw-r--. 1 ansible ansible 424901 05-25 18:56 logbackup-managedhost1.tar.gz
-rw-rw-r--. 1 ansible ansible 404496 05-25 18:56 logbackup-managedhost2.tar.gz
[ansible@controlnode scripts]$ tar -xvf logbackup-managedhost1.tar.gz
log/ppp/
log/tuned/
log/audit/
log/chrony/
log/anaconda/
log/rhsm/
log/httpd/
log/lastlog
log/wtmp
log/btmp
log/messages
log/secure
log/maillog
log/spooler
log/vmware-vmsvc.log
log/firewalld
log/cron
log/dmesg.old
log/yum.log
log/boot.log
log/dmesg
log/tuned/tuned.log
log/audit/audit.log
log/anaconda/anaconda.log
log/anaconda/syslog
log/anaconda/X.log
log/anaconda/program.log
log/anaconda/packaging.log
log/anaconda/storage.log
log/anaconda/ifcfg.log
log/anaconda/ks-script-dEorE0.log
log/anaconda/journal.log
log/httpd/error_log
log/httpd/access_log
```
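Because fetch was used with `flat: yes` and a per-host `dest`, every host’s archive lands in the current directory under its own name. Before relying on them as backups, it is worth confirming that each file is a readable gzip-compressed tar without extracting it. A sketch; the helper name is hypothetical and the `logbackup-HOST.tar.gz` naming follows the playbook above.

```bash
#!/bin/bash
# Hypothetical check: print each fetched backup's host name and entry count,
# and return non-zero if any archive cannot be read.

check_backups() {
    # $1 = directory holding logbackup-*.tar.gz files (default: current dir)
    local dir=${1:-.} f host count bad=0
    for f in "$dir"/logbackup-*.tar.gz; do
        [ -e "$f" ] || { echo "no backups found in $dir" >&2; return 1; }
        host=${f##*/logbackup-}    # strip directory and prefix
        host=${host%.tar.gz}       # strip extension
        if tar -tzf "$f" >/dev/null 2>&1; then
            count=$(tar -tzf "$f" | wc -l)
            echo "$host: $((count)) entries"
        else
            echo "$host: UNREADABLE" >&2
            bad=1
        fi
    done
    return "$bad"
}
```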

Cron

The Cron Module

- The cron module is used to manage crontab entries on your nodes.

- You can create environment variables as well as named crontab entries.

- You can add, update, and delete entries.

- You should add a name to each crontab entry so it can be removed easily with a playbook.

- e.g. name: "Job 0001"

- When managing environment variables, no comment line gets added; however, the module uses the name parameter to find the correct definition line.
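The name is what ties the module to its entry: for a job, the module keeps it as a `#Ansible: NAME` comment line directly above the schedule line (visible in the `crontab -l` output further down). A sketch that renders that pair of lines, to show what a named job is expected to look like in the crontab; the helper is hypothetical and only fills the minute and hour fields.

```bash
#!/bin/bash
# Hypothetical renderer: print the "#Ansible: NAME" marker and the
# "minute hour * * * command" line for a named job.

cron_entry() {
    # $1 = name, $2 = minute, $3 = hour, $4 = job command
    printf '#Ansible: %s\n%s %s * * * %s\n' "$1" "$2" "$3" "$4"
}
```

For example, `cron_entry 'Job 0001' 0 5,17 'df -h >> /tmp/diskspace'` renders the same two lines that the cron playbook in this section creates.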

Extra Parameters

- You remove a crontab entry by using state: absent in a playbook.

- The name: value is what gets matched for a removal.

- You can use the boolean disabled to comment out an entry (only works if state=present).

- Jobs can be set to run at reboot if required. Use the special_time: reboot option for that.

- Use the user parameter if you need to manage the crontab of a specific user (this requires becoming root).

- Use insertafter or insertbefore to add an env entry before or after another env entry.
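Removal with state: absent works because the marker comment identifies the job line that follows it. The same matching idea, sketched as a text filter over `crontab -l` output; this is an illustration of the lookup by name, not the module’s actual code, and the helper name is hypothetical.

```bash
#!/bin/bash
# Hypothetical filter: drop the "#Ansible: NAME" marker and the job line after it.
# Reads a crontab on stdin and writes the remaining lines to stdout.

remove_cron_job() {
    # $1 = job name exactly as given in the playbook's name: field
    awk -v marker="#Ansible: $1" '
        skip          { skip = 0; next }    # the job line right after the marker
        $0 == marker  { skip = 1; next }    # the marker itself
        { print }
    '
}
```

Applied by hand it would be `crontab -l | remove_cron_job 'Job 0001' | crontab -`, although in practice the cron module with state: absent is the better tool.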

An example playbook:

```
[ansible@controlnode scripts]$ cat cron.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
  - name: Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"
    cron:
      name: "Job 0001"
      minute: "0"
      hour: "5,17"
      job: "df -h >> /tmp/diskspace"
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check cron.yml

playbook: cron.yml
[ansible@controlnode scripts]$ ansible-playbook cron.yml

PLAY [all] ************************************************************************************************************************************

TASK [Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"] ******************************
changed: [localhost]
changed: [managedhost1]
changed: [managedhost2]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check whether the playbook worked. The play runs with `become: yes`, so the job is in root's crontab; list it with sudo:

```
[ansible@controlnode scripts]$ sudo crontab -l
#Ansible: Job 0001
0 5,17 * * * df -h >> /tmp/diskspace
```

Now modify the playbook to add two environment variables:

```
[ansible@controlnode scripts]$ cat cron.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"
      cron:
        name: "Job 0001"
        minute: "0"
        hour: "5,17"
        job: "df -h >> /tmp/diskspace"

    - name: Creates an entry like "PATH=/opt/bin" on top of crontab
      cron:
        name: PATH
        env: yes
        job: /opt/bin

    - name: Creates an entry like "APP_HOME=/srv/app" and insert it after PATH declaration
      cron:
        name: APP_HOME
        env: yes
        job: /srv/app
        insertbefore: PATH
```

Run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook cron2.yml

PLAY [all] ************************************************************************************************************************************

TASK [Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"] ******************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Creates an entry like "PATH=/opt/bin" on top of crontab] ********************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Creates an entry like "APP_HOME=/srv/app" and insert it after PATH declaration] *********************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check what the new playbook has done:

```
[ansible@controlnode scripts]$ sudo crontab -l
APP_HOME="/srv/app"
PATH="/opt/bin"
#Ansible: Job 0001
0 5,17 * * * df -h >> /tmp/diskspace
```

The playbook added the environment variables APP_HOME and PATH. APP_HOME was placed before PATH because the task uses `insertbefore: PATH`, even though the task name says "after".
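If you want APP_HOME to follow PATH, as the task name says, the task can use `insertafter` instead. The fragment below is a sketch of that replacement task, not something run in this lesson. The position parameters should only matter when the variable is first added, so on hosts where APP_HOME already exists it would probably have to be removed first.

```yaml
# Sketch: replacement for the APP_HOME task, placing the variable
# after the PATH line instead of before it.
- name: Creates an entry like "APP_HOME=/srv/app" and insert it after PATH declaration
  cron:
    name: APP_HOME
    env: yes
    job: /srv/app
    insertafter: PATH
```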

To delete these entries from the crontab, add `state: absent` to each task:

```
[ansible@controlnode scripts]$ cat cron2.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"
      cron:
        name: "Job 0001"
        minute: "0"
        hour: "5,17"
        job: "df -h >> /tmp/diskspace"
        state: absent

    - name: Creates an entry like "PATH=/opt/bin" on top of crontab
      cron:
        name: PATH
        env: yes
        job: /opt/bin
        state: absent

    - name: Creates an entry like "APP_HOME=/srv/app" and insert it after PATH declaration
      cron:
        name: APP_HOME
        env: yes
        job: /srv/app
        insertbefore: PATH
        state: absent
```

Run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook cron2.yml

PLAY [all] ************************************************************************************************************************************

TASK [Ensure a job that runs at 5am and 5pm exists. Creates an entry like "0 5,17 * * * df -h >> /tmp/diskspace"] ******************************
changed: [localhost]
changed: [managedhost1]
changed: [managedhost2]

TASK [Creates an entry like "PATH=/opt/bin" on top of crontab] ********************************************************************************
changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

TASK [Creates an entry like "APP_HOME=/srv/app" and insert it after PATH declaration] *********************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP ************************************************************************************************************************************
localhost : ok=3 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check the crontab:

```
[ansible@controlnode scripts]$ sudo crontab -l
```

It is empty.

at module

at software (in Linux)

- The at command schedules jobs that run once at a specified time in the future.

- It is meant for single ad-hoc jobs and is not a replacement for cron.

- It is part of a group of commands that are installed with the at package.

- The commands in this group are:

  - at: Executes commands at a specific time.

  - atq: Lists the user's pending jobs.

  - atrm: Deletes a job by its job number.

  - batch: Executes commands when system load levels permit (by default, when the load average drops below 1.5, or below the value given to atd).

- Only at and atrm are controlled via the at module.

Service Details

- The at package may not be installed on every system, so verify that it is present.

- On Red Hat or CentOS systems, install it with the `yum install at` command.

- The service is controlled by the atd daemon.

An example playbook that installs at and enables the atd service:

```
[ansible@controlnode scripts]$ cat at.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: install the at command for job scheduling
      action: yum name=at state=installed

    - name: Enable and Start service at if not started
      service:
        name: atd
        enabled: yes
        state: started
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check at.yml

playbook: at.yml
[ansible@controlnode scripts]$ ansible-playbook at.yml

PLAY [all] ****************************************************************************************************************************************************

TASK [install the at command for job scheduling] **************************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Enable and Start service at if not started] *************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

PLAY RECAP ****************************************************************************************************************************************************
localhost : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check that at has been installed:

```
[ansible@controlnode scripts]$ ansible all -m yum -a "name=at state=present"
managedhost2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "msg": "",
    "rc": 0,
    "results": [
        "at-3.1.13-24.el7.x86_64 providing at is already installed"
    ]
}
managedhost1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "msg": "",
    "rc": 0,
    "results": [
        "at-3.1.13-24.el7.x86_64 providing at is already installed"
    ]
}
localhost | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "msg": "",
    "rc": 0,
    "results": [
        "at-3.1.13-24.el7.x86_64 providing at is already installed"
    ]
}
```

Now modify the playbook to add an at job:

```
[ansible@controlnode scripts]$ cat at2.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: install the at command for job scheduling
      action: yum name=at state=installed

    - name: Enable and Start service at if not started
      service:
        name: atd
        enabled: yes
        state: started

    - name: Schedule a command to execute in 20 minutes as the ansible user
      at:
        command: df -h > /tmp/diskspace
        count: 20
        units: minutes
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check at2.yml

playbook: at2.yml
[ansible@controlnode scripts]$ ansible-playbook at2.yml

PLAY [all] ****************************************************************************************************************************************************

TASK [install the at command for job scheduling] **************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

TASK [Enable and Start service at if not started] *************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

TASK [Schedule a command to execute in 20 minutes as the ansible user] ****************************************************************************************
changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

PLAY RECAP ****************************************************************************************************************************************************
localhost : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Check that the job has been queued:

```
[ansible@controlnode scripts]$ sudo atq
1 Mon May 25 20:25:00 2020 a root
[ansible@controlnode scripts]$ sudo at -c 1
#!/bin/sh
# atrun uid=0 gid=0
# mail ansible 0
umask 22
.....OUTPUT OMITTED.......
cd /home/ansible || {
     echo 'Execution directory inaccessible' >&2
     exit 1
}
${SHELL:-/bin/sh} << 'marcinDELIMITER0d442f6d'
df -h > /tmp/diskspace
```

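The job belongs to root rather than the ansible user named in the task, because the play runs with `become: yes`. To remove the at job, add `state: absent` to the task: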

```
[ansible@controlnode scripts]$ cat at2.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: install the at command for job scheduling
      action: yum name=at state=installed

    - name: Enable and Start service at if not started
      service:
        name: atd
        enabled: yes
        state: started

    - name: Schedule a command to execute in 20 minutes as the ansible user
      at:
        command: df -h > /tmp/diskspace
        count: 20
        units: minutes
        state: absent
```

Run the playbook and check that the job has been removed:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check at2.yml

playbook: at2.yml
[ansible@controlnode scripts]$ ansible-playbook at2.yml

PLAY [all] ****************************************************************************************************************************************************

TASK [install the at command for job scheduling] **************************************************************************************************************
ok: [managedhost1]
ok: [localhost]
ok: [managedhost2]

TASK [Enable and Start service at if not started] *************************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Schedule a command to execute in 20 minutes as the ansible user] ****************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

PLAY RECAP ****************************************************************************************************************************************************
localhost : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
[ansible@controlnode scripts]$ sudo atq
[ansible@controlnode scripts]$ at -c 1
Cannot find jobid 1
```
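With the default `state: present`, each run of the scheduling task queues another job, so running at2.yml twice would leave two identical jobs in the queue. The at module's `unique` option is intended to prevent that. The fragment below is a sketch of the scheduling task from at2.yml with that option added; it was not run in this lesson.

```yaml
# Sketch: variant of the at2.yml scheduling task. With unique: yes the
# at module does not add the job if a matching one is already queued.
- name: Schedule a command to execute in 20 minutes, at most once
  at:
    command: df -h > /tmp/diskspace
    count: 20
    units: minutes
    unique: yes
```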

Security

Ansible Security Tasks

- Ansible is very useful as a security tool.

- You can make security changes to many nodes at once.

- You can apply hardening changes to secure nodes easily.

- You can check many nodes for vulnerabilities quickly.

- It can work well with other tools that you may have in place.

- Check for Ansible modules that can be used for security tasks.

- It is not just for Linux: it can also be used for OS X, Solaris, Windows, and others.

- It can be used for devices such as NetApp or EMC storage, F5, and others.

There are many modules that can be useful for security:

- selinux: Configures the SELinux mode and policy.

- firewalld and iptables: Both manage firewall policies.

- pamd: Manages PAM modules.

- Ansible can work with Datadog, Nagios, and other monitoring tools.

- It can manage users and groups (for example, bulk adding and deleting users if you don't have SSO).

- It can manage certificates and keys, such as OpenSSL certificates or SSH keys.

- Many other capabilities exist; search the All Modules page: https://docs.ansible.com/ansible/latest/modules/list_of_all_modules.html
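As an illustration of the bulk user management mentioned above, a loop lets one user task handle a list of accounts. This sketch assumes the developers group already exists (it is created with the group module later in this lesson), and dev1, dev2 and dev3 are hypothetical account names. Setting `state: absent` in the same task would remove the accounts in bulk instead.

```yaml
---
# Sketch only: dev1, dev2 and dev3 are hypothetical account names and the
# "developers" group must already exist on every managed host.
- hosts: all
  become: yes
  gather_facts: no
  tasks:
    - name: Create several accounts in one task
      user:
        name: "{{ item }}"
        shell: /bin/bash
        groups: developers
        append: yes          # add to developers without dropping other groups
      loop:
        - dev1
        - dev2
        - dev3
```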

An example playbook using the selinux module:

```
[ansible@controlnode scripts]$ cat selinux.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Enable SELinux
      selinux:
        policy: targeted
        state: enforcing
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check selinux.yml

playbook: selinux.yml
[ansible@controlnode scripts]$ ansible-playbook selinux.yml

PLAY [all] ****************************************************************************************************************************************************

TASK [Enable SELinux] *****************************************************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

PLAY RECAP ****************************************************************************************************************************************************
localhost : ok=1 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Nothing changed because SELinux was already using the targeted policy in enforcing mode.
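Switching SELinux from disabled to enabled only takes effect after a reboot. The selinux module reports this in its `reboot_required` return value, so a playbook can reboot only when it is needed. The sketch below registers the result under the hypothetical variable name selinux_result and uses the reboot module (available since Ansible 2.7); it was not run in this lesson.

```yaml
---
# Sketch: reboot only when the selinux module says a reboot is needed
# (typically when SELinux was previously disabled).
- hosts: all
  become: yes
  gather_facts: no
  tasks:
    - name: Enable SELinux
      selinux:
        policy: targeted
        state: enforcing
      register: selinux_result     # hypothetical variable name

    - name: Reboot so the new SELinux state takes effect
      reboot:
      when: selinux_result.reboot_required
```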

An example playbook using the firewalld module:

```
[ansible@controlnode scripts]$ cat firewall.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: install firewalld
      action: yum name=firewalld state=installed

    - name: Enable firewalld on system reboot
      service: name=firewalld enabled=yes

    - name: Start service firewalld, if not started
      service:
        name: firewalld
        state: started

    - firewalld:
        service: dhcpv6-client
        permanent: yes
        immediate: yes
        state: disabled

    - firewalld:
        service: http
        permanent: yes
        immediate: yes
        state: enabled

    - firewalld:
        service: ssh
        permanent: yes
        immediate: yes
        state: enabled
```

Before running the playbook, check which services the firewall currently allows:

```
[ansible@controlnode scripts]$ ansible all -b -a "/bin/firewall-cmd --list-services"
managedhost2 | CHANGED | rc=0 >>
dhcpv6-client http ssh
managedhost1 | CHANGED | rc=0 >>
dhcpv6-client http ssh
localhost | CHANGED | rc=0 >>
dhcpv6-client http ssh
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check firewall.yml

playbook: firewall.yml
[ansible@controlnode scripts]$ ansible-playbook firewall.yml

PLAY [all] ****************************************************************************************************************************************************

TASK [install firewalld] **************************************************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [Enable firewalld on system reboot] **********************************************************************************************************************
ok: [managedhost1]
ok: [localhost]
ok: [managedhost2]

TASK [Start service firewalld, if not started] ****************************************************************************************************************
ok: [managedhost2]
ok: [managedhost1]
ok: [localhost]

TASK [firewalld] **********************************************************************************************************************************************
changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

TASK [firewalld] **********************************************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

TASK [firewalld] **********************************************************************************************************************************************
ok: [managedhost1]
ok: [managedhost2]
ok: [localhost]

PLAY RECAP ****************************************************************************************************************************************************
localhost : ok=6 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=6 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=6 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Now check which services the firewall allows:

```
[ansible@controlnode scripts]$ ansible all -b -a "/bin/firewall-cmd --list-services"
managedhost1 | CHANGED | rc=0 >>
http ssh
localhost | CHANGED | rc=0 >>
http ssh
managedhost2 | CHANGED | rc=0 >>
http ssh
```
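The two firewalld tasks that enable services differ only in the service name, so they could be collapsed into one task with a loop. A sketch of that variant, which should leave the same services allowed but was not run in this lesson:

```yaml
# Sketch: the http and ssh firewalld tasks from firewall.yml as one looped task.
- name: Allow http and ssh, permanently and in the running configuration
  firewalld:
    service: "{{ item }}"
    permanent: yes      # survive a firewalld reload or reboot
    immediate: yes      # also apply to the running configuration now
    state: enabled
  loop:
    - http
    - ssh
```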

Next, add a group called developers to the managed hosts:

```
[ansible@controlnode scripts]$ cat add-group.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Ensure group "developers" exists
      group:
        name: developers
        state: present
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check add-group.yml

playbook: add-group.yml
[ansible@controlnode scripts]$ ansible-playbook add-group.yml

PLAY [all] *********************************************************************************************************************************************

TASK [Ensure group "developers" exists] ****************************************************************************************************************
changed: [managedhost1]
changed: [managedhost2]
changed: [localhost]

PLAY RECAP *********************************************************************************************************************************************
localhost : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

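The user module expects an already hashed password, not plain text. One way to get a hash is to create a temporary user, set its password, and copy the hash from /etc/shadow: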

```
[ansible@controlnode scripts]$ sudo adduser tempuser
[ansible@controlnode scripts]$ passwd adduser tempuser
passwd: Only root can specify a user name.
[ansible@controlnode scripts]$ sudo passwd tempuser
Changing password for user tempuser.
New password:
BAD PASSWORD: The password is shorter than 8 characters
Retype new password:
passwd: all authentication tokens updated successfully.
[ansible@controlnode scripts]$ sudo grep tempuser /etc/shadow
tempuser:$6$INs/ECOm$uWFAk/HoNxHdPq.YPlPYLkvoUQb1Uou42dGwYgE7Yi7kglhh92pzLuH/8P4D.Pl/H3R8QDkh0VmfKla/4Q0640:18407:0:99999:7:::
```

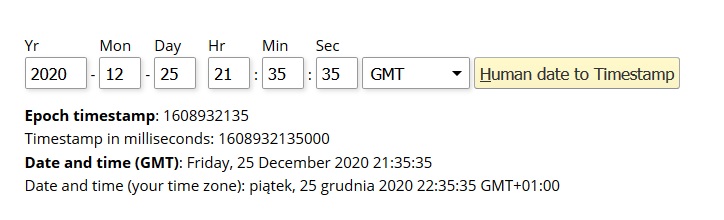

Besides the password hash, the `expires` parameter needs the expiry date as a Unix epoch timestamp (seconds since 1 January 1970 UTC), which you can get from the www.epochconverter.com site.
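Instead of copying the hash from a temporary account, Ansible's `password_hash` filter can generate a SHA-512 hash when the playbook runs. In this sketch the account name exampleuser, the password ChangeMe123 and the salt examplesalt are hypothetical. A fixed salt keeps the hash identical between runs, so the task does not report a change every time. In practice the plain-text password would normally be kept in Ansible Vault rather than in the playbook, and depending on the control node the passlib Python library may be required.

```yaml
---
# Sketch only: exampleuser, ChangeMe123 and examplesalt are hypothetical values.
- hosts: all
  become: yes
  gather_facts: no
  vars:
    new_password: ChangeMe123          # would normally come from Ansible Vault
  tasks:
    - name: Add a user whose password hash is generated by Ansible
      user:
        name: exampleuser
        # Fixed salt, so the same hash is produced on every run.
        password: "{{ new_password | password_hash('sha512', 'examplesalt') }}"
        expires: 1608932135            # Unix epoch seconds
```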

The playbook looks like this, with placeholders for both values:

```
[ansible@controlnode scripts]$ cat add-user.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Add a consultant whose account you want to expire
      user:
        name: james20
        shell: /bin/bash
        groups: developers
        append: yes
        expires: REPLACE-WITH-EPOCH
        password: REPLACE-WITH-HASH
```

The playbook with the expiry date and password hash filled in:

```
[ansible@controlnode scripts]$ cat add-user.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Add a consultant whose account you want to expire
      user:
        name: james20
        shell: /bin/bash
        groups: developers
        append: yes
        expires: 1608932135
        password: $6$INs/ECOm$uWFAk/HoNxHdPq.YPlPYLkvoUQb1Uou42dGwYgE7Yi7kglhh92pzLuH/8P4D.Pl/H3R8QDkh0VmfKla/
```

Check and run the playbook:

```
[ansible@controlnode scripts]$ ansible-playbook --syntax-check add-user.yml

playbook: add-user.yml
[ansible@controlnode scripts]$ ansible-playbook add-user.yml

PLAY [all] ****************************************************************************************************************************************

TASK [Add a consultant whose account you want to expire] ******************************************************************************************
[WARNING]: The input password appears not to have been hashed. The 'password' argument must be encrypted for this module to work properly.

changed: [localhost]
changed: [managedhost1]
changed: [managedhost2]

PLAY RECAP ****************************************************************************************************************************************
localhost : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

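The warning appears because the password value in the playbook is shorter than the hash copied from /etc/shadow: its last characters (4Q0640) are missing, so the user module does not recognise it as a complete SHA-512 hash, and nobody will be able to log in with the intended password. Copying the full hash avoids the warning. Now check whether user james20 was added to the system: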

```
[ansible@controlnode scripts]$ sudo grep james20 /etc/passwd
james20:x:1003:1004::/home/james20:/bin/bash
```

To remove the user from the system, add `state: absent` to the task:

```
[ansible@controlnode scripts]$ cat add-user.yml
---
- hosts: all
  user: ansible
  become: yes
  gather_facts: no
  tasks:
    - name: Add a consultant whose account you want to expire
      user:
        name: james20
        shell: /bin/bash
        groups: developers
        append: yes
        expires: 1608932135
        password: $6$INs/ECOm$uWFAk/HoNxHdPq.YPlPYLkvoUQb1Uou42dGwYgE7Yi7kglhh92pzLuH/8P4D.Pl/H3R8QDkh0VmfKla/
        state: absent
```

Run the playbook and check whether the user is still present on the system:

```
[ansible@controlnode scripts]$ ansible-playbook add-user.yml

PLAY [all] ****************************************************************************************************************************************

TASK [Add a consultant whose account you want to expire] ******************************************************************************************
[WARNING]: The input password appears not to have been hashed. The 'password' argument must be encrypted for this module to work properly.

changed: [managedhost2]
changed: [managedhost1]
changed: [localhost]

PLAY RECAP ****************************************************************************************************************************************
localhost : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost1 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
managedhost2 : ok=1 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
[ansible@controlnode scripts]$ sudo grep james20 /etc/passwd
```
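The grep returns nothing, so the account is gone. By default, `state: absent` leaves the user's home directory in place. The user module's `remove` option asks it to delete the home directory as well, much like `userdel --remove`. A sketch of that task:

```yaml
# Sketch: delete the james20 account together with its home directory.
- name: Remove the consultant account and its home directory
  user:
    name: james20
    state: absent
    remove: yes     # behaves like userdel --remove
```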