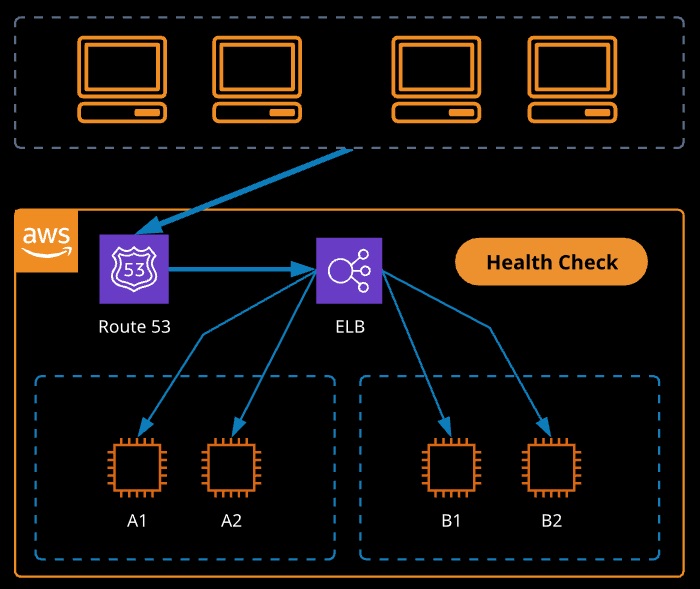

- Load balancing is a method used to distribute incoming connections across a group of servers or services.

- Incoming connections are made to the load balancer, which distributes them to associated services.

- Elastic Load Balancing (ELB) is a service that provides highly available, scalable load balancers, including these three types:

  - Classic Load Balancer (CLB)

  - Application Load Balancer (ALB)

  - Network Load Balancer (NLB)

- ELBs can be paired with Auto Scaling groups to improve high availability and fault tolerance and to automate scaling (elasticity).

- Each elastic load balancer is assigned a DNS name, which clients use to reach it.

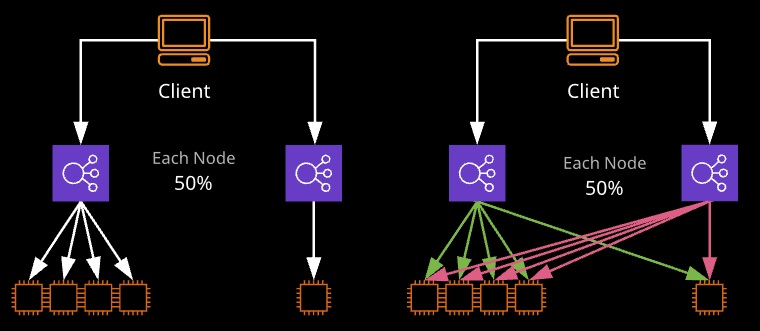

A node is placed in each AZ the load balancer is active in, and each node receives 1/N of the traffic, where N is the number of nodes. Historically, each node could only load balance to instances in its own AZ, which results in uneven traffic distribution when AZs contain different numbers of instances. Cross-zone load balancing allows each node to distribute traffic to instances in all AZs.
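The 1/N split can be made concrete with a small calculation. The Python sketch below is a simplified model, not how ELB is implemented: it assumes DNS spreads traffic evenly across one node per AZ, that each node spreads its share evenly across the instances it can reach, and that every AZ has at least one instance. The AZ names are placeholders.

```python
def per_instance_share(instances_per_az: dict[str, int], cross_zone: bool) -> dict[str, float]:
    """Fraction of total traffic each instance receives, keyed by AZ.

    Simplified model: each of the N nodes (one per AZ) gets 1/N of the traffic
    and splits it evenly across the instances it is allowed to reach.
    """
    node_share = 1 / len(instances_per_az)        # each node gets 1/N of the traffic
    total_instances = sum(instances_per_az.values())
    shares = {}
    for az, count in instances_per_az.items():
        if cross_zone:
            # Every node reaches every instance: N nodes x (1/N) / total = 1/total
            shares[az] = 1 / total_instances
        else:
            # A node reaches only the instances in its own AZ
            shares[az] = node_share / count
    return shares


if __name__ == "__main__":
    azs = {"az-a": 2, "az-b": 8}                       # placeholder AZ names
    print(per_instance_share(azs, cross_zone=False))   # {'az-a': 0.25, 'az-b': 0.0625}
    print(per_instance_share(azs, cross_zone=True))    # {'az-a': 0.1, 'az-b': 0.1}
```

With 2 instances in one AZ and 8 in the other, turning cross-zone load balancing off gives each instance in the smaller AZ four times the traffic of each instance in the larger one; turning it on evens every instance out at 10%.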

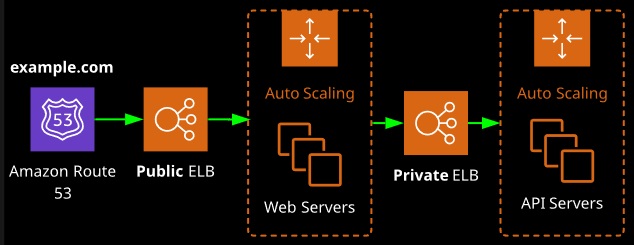

An elastic load balancer can be public facing, meaning it accepts traffic from the public Internet, or internal, which is only accessible from inside a VPC and is often used between application tiers.

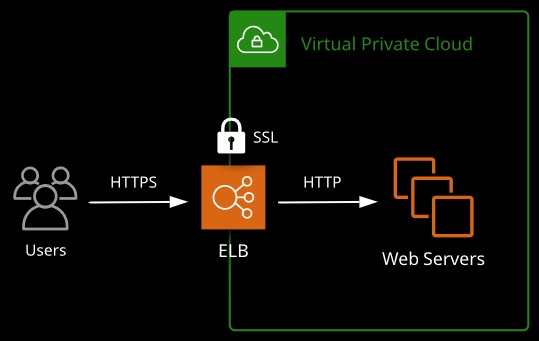

An elastic load balancer accepts traffic via listeners, each configured with a protocol and port. A listener can terminate HTTPS, meaning the load balancer handles encryption and decryption, which reduces CPU usage on the instances.

Application Load Balancer

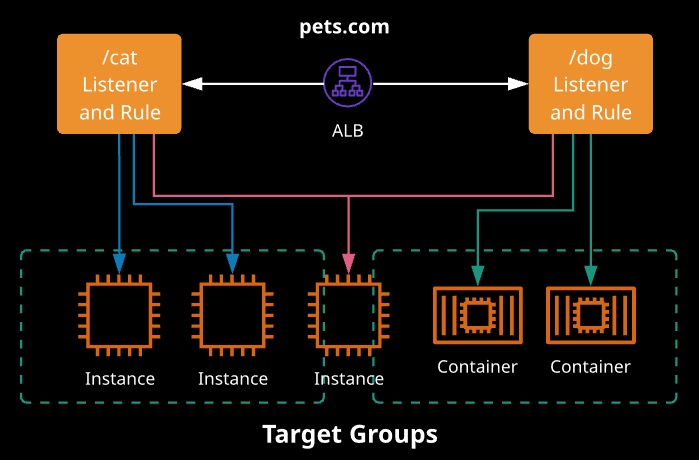

- Application Load Balancers (ALBs) operate at Layer 7 of the OSI model. They understand HTTP and HTTPS and can make load-balancing decisions based on the content of those requests.

- ALBs are now recommended as the default LB for VPCs. They perform better than CLBs and are almost always cheaper.

- Content rules can direct certain traffic to specific target groups.

  - Host-based rules: Route traffic based on the host name in the request

  - Path-based rules: Route traffic based on the URL path

- ALBs support EC2, ECS, EKS, and Lambda targets as well as HTTPS, HTTP/2, and WebSockets, and they can be integrated with AWS Web Application Firewall (WAF).

- Use an ALB if you need to use containers or microservices.

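- Targets are registered in target groups, and content rules route requests to those target groups (Targets -> Target Groups -> Content Rules).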

- An ALB can host multiple SSL certificates using SNI.
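SNI lets the client send the hostname it wants during the TLS handshake, so the load balancer can pick a matching certificate before any HTTP data is exchanged. The Python sketch below models that selection under simplifying assumptions: an exact hostname match wins, a wildcard such as *.mysite.com covers exactly one extra label, and a default certificate is used when nothing matches or no SNI is sent. The certificate identifiers are hypothetical, and the real ALB selection logic has more rules than shown here.

```python
from typing import Optional


def select_certificate(server_name: Optional[str], certificates: dict[str, str],
                       default_cert: str) -> str:
    """Choose a certificate for a TLS handshake from the SNI hostname.

    `certificates` maps a lowercase domain (exact, or a wildcard such as
    "*.mysite.com") to a hypothetical certificate identifier.
    """
    if not server_name:
        return default_cert                      # client sent no SNI
    name = server_name.lower()
    if name in certificates:
        return certificates[name]                # exact match wins
    _, _, parent = name.partition(".")
    if parent and f"*.{parent}" in certificates:
        return certificates[f"*.{parent}"]       # wildcard covers one label only
    return default_cert                          # nothing matched


if __name__ == "__main__":
    certs = {"dev.mysite.com": "cert-dev", "*.mysite.com": "cert-wildcard"}
    print(select_certificate("dev.mysite.com", certs, "cert-default"))   # cert-dev
    print(select_certificate("prod.mysite.com", certs, "cert-default"))  # cert-wildcard
    print(select_certificate("a.b.mysite.com", certs, "cert-default"))   # cert-default
```

This is why one ALB listener can serve several domains, whereas a CLB is limited to one certificate.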

1. Three components

   - Load balancer: Receives client requests (HTTP, HTTPS)

   - Listeners: Read requests from clients, compare each request to the listener's rules, then forward it to a target group

   - Target group: Receives requests forwarded by listeners; health checks are configured per target group, and a target can be in multiple target groups

2. Works at the Application layer (7)

3. Content-based routing

   - Path-based routing: Forwards based on the URL path in the request; /dev and /prod can route to different target groups

   - Host-based routing: Forwards based on the Host field of the HTTP header; dev.mysite.com and prod.mysite.com can route to different target groups

4. Routes to IP addresses, including outside the VPC (on-premises)

5. Routes to microservices (supports dynamic port mapping, so several containers on the same instance can be registered as targets on different ports)
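Listener rules are evaluated in priority order, lowest number first, and the first rule whose conditions all match decides the target group; if none match, the listener's default action applies. The Python sketch below models that flow for host-based and path-based conditions only. Python's fnmatchcase stands in for ALB's wildcard matching (it also accepts [...] character classes, which ALB does not), and the rule and target group names are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class Rule:
    priority: int                 # lower number is evaluated first
    target_group: str             # hypothetical target group name
    host: Optional[str] = None    # host-based condition, e.g. "dev.mysite.com"
    path: Optional[str] = None    # path-based condition, e.g. "/dev/*"

    def matches(self, host: str, path: str) -> bool:
        # Every condition that is set must match; unset conditions are ignored.
        # Host names are compared case-insensitively, paths case-sensitively.
        if self.host is not None and not fnmatchcase(host.lower(), self.host.lower()):
            return False
        if self.path is not None and not fnmatchcase(path, self.path):
            return False
        return True


def route(rules: list[Rule], default_target_group: str, host: str, path: str) -> str:
    """Return the target group for one request: first matching rule by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(host, path):
            return rule.target_group
    return default_target_group   # the listener's default action


if __name__ == "__main__":
    rules = [
        Rule(10, "tg-dev", path="/dev/*"),
        Rule(20, "tg-prod", path="/prod/*"),
        Rule(30, "tg-dev-host", host="dev.mysite.com"),
    ]
    print(route(rules, "tg-default", "www.mysite.com", "/prod/orders"))  # tg-prod
    print(route(rules, "tg-default", "dev.mysite.com", "/"))             # tg-dev-host
    print(route(rules, "tg-default", "www.mysite.com", "/"))             # tg-default
```

Because evaluation stops at the first match, a request to dev.mysite.com/dev/... goes to tg-dev (priority 10) rather than tg-dev-host (priority 30).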

Monitoring

- CloudWatch metrics: ActiveConnectionCount, HealthyHostCount, HTTP code totals, and more

- Access logs: Sends detailed request information to S3

- Request tracing: The ALB adds an X-Amzn-Trace-Id header containing a trace identifier to each request

- CloudTrail logs: Records API activity

Network Load Balancer

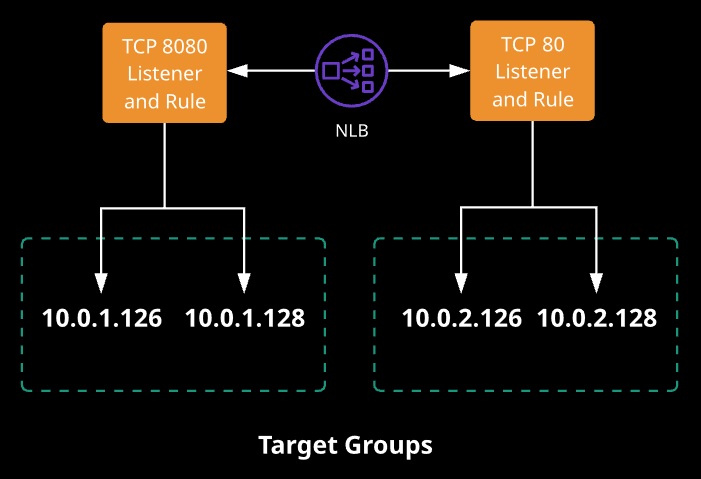

Network Load Balancers (NLBs) are newer than CLBs and ALBs and operate at Layer 4 of the OSI network model. There are a few scenarios and benefits to using an NLB versus an ALB:

- Can support protocols other than HTTP/S because it forwards upper layers unchanged

- Less latency because no processing above Layer 4 is required

- IP addressable: each node has a static IP address

- Best load balancing performance within AWS

- Source IP address preservation: packets are forwarded unchanged

- Targets can be addressed using IP address

1. Same three components as the ALB

   - Load balancer: Receives client connections

   - Listeners: Accept client connections on a configured protocol and port and forward them to a target group (unlike ALB listeners, they do not evaluate content-based rules)

   - Target group: Uses the protocol (such as TCP) and port to route requests to targets (EC2 instances, on-premises servers); health checks are configured per target group, and a target can be in multiple target groups

2. Functions at the Transport layer (4)

3. Can handle millions of connections (no pre-warming needed)

4. Each Availability Zone assigned gets a node created in it with a static IP (or an Elastic IP)

   - Reduces latency

5. Register targets by:

   - Instance ID: Source addresses of clients are preserved

   - IP address: Source addresses of clients are, by default, the private IP of the NLB node

6. Each client TCP connection has its own source port and sequence number, so separate connections (even from the same client) can be routed to different targets

7. Change targets anytime
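For TCP traffic, AWS documents that an NLB node picks a target with a flow hash over the protocol, source IP and port, destination IP and port, and TCP sequence number. Every packet of one connection therefore reaches the same target, while a client's separate connections may land on different targets. The Python sketch below illustrates that idea; the SHA-256 hash and the modulo step are assumptions for illustration, not the hash NLB actually uses, and the addresses and target IDs are placeholders.

```python
import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    tcp_seq: int    # TCP sequence number observed for the connection


def pick_target(flow: Flow, targets: list[str]) -> str:
    """Map a flow to a target deterministically: the same flow always hashes the same."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()          # illustrative hash, not NLB's
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


if __name__ == "__main__":
    targets = ["i-target-a", "i-target-b", "i-target-c"]   # placeholder target IDs
    # Same client IP, different source ports and sequence numbers (separate connections)
    for port, seq in [(50001, 1111), (50002, 2222), (50003, 3333)]:
        flow = Flow("TCP", "203.0.113.10", port, "10.0.0.5", 443, seq)
        print(port, pick_target(flow, targets))
```

Hashing rather than tracking per-client state keeps target selection cheap, which fits the NLB's focus on low latency.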

Monitoring

- CloudWatch metrics: ActiveFlowCount, HealthyHostCount, UnhealthyHostCount, and more

- VPC Flow Logs: Detailed log of traffic going to and from your NLB

- CloudTrail logs: Records API activity

Classic Load Balancer

Classic Load Balancers are the oldest type of load balancer and generally should be avoided for new projects.

- Supports Layer 4 (TCP and SSL) and some HTTP/S features

- It isn't a full Layer 7 device, so HTTP/S support is limited (for example, no content-based routing)

- One SSL certificate per CLB, which can get expensive for complex projects that need several certificates

- Can offload SSL connections: HTTPS to the load balancer and HTTP to the instance (lower CPU and admin overhead on instances)

- Can be associated with Auto Scaling groups

- DNS A Record is used to connect to the CLB

1. A simple, no-frills load balancer

2. Supports EC2-Classic

3. Supports HTTP, HTTPS, TCP, and SSL listeners

4. Cross-zone load balancing

   - Enable it to distribute traffic evenly across all registered instances

   - Recommended to keep roughly the same number of instances in each AZ

LB Health Checks

Health checks can be configured to check the health of any attached services. If a problem is detected, incoming connections won't be routed to the affected instance until it passes health checks again.

CLB health checks can use TCP, HTTP, HTTPS, or SSL on any port from 1 to 65535. With HTTP/S checks, a specific HTTP/S path can also be tested.
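A single failed check does not usually flip a target's state: ELB health checks are configured with a healthy threshold and an unhealthy threshold, the number of consecutive successes or failures required before a target changes state. The Python sketch below models that state machine; the threshold values in the code are arbitrary choices for illustration, not ELB's defaults, and the target names are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class TargetHealth:
    healthy_threshold: int = 3      # consecutive passes needed to become healthy
    unhealthy_threshold: int = 2    # consecutive failures needed to become unhealthy
    healthy: bool = True
    _streak: int = field(default=0, init=False, repr=False)  # results disagreeing with current state

    def record(self, check_passed: bool) -> bool:
        """Record one health-check result and return whether the target is healthy."""
        if check_passed == self.healthy:
            self._streak = 0        # result agrees with the current state
            return self.healthy
        self._streak += 1
        threshold = self.unhealthy_threshold if self.healthy else self.healthy_threshold
        if self._streak >= threshold:
            self.healthy = not self.healthy   # flip state once the threshold is reached
            self._streak = 0
        return self.healthy


def routable(targets: dict[str, TargetHealth]) -> list[str]:
    """Only healthy targets receive new connections."""
    return [name for name, health in targets.items() if health.healthy]


if __name__ == "__main__":
    web1 = TargetHealth()
    print([web1.record(ok) for ok in [False, False, True, True, True]])
    # [True, False, False, False, True]
```

Requiring consecutive results stops a single dropped probe from removing a target, at the cost of reacting a few intervals later to a real failure.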

Monitoring

- CloudWatch metrics: HealthyHostCount, RequestCount, Latency, HTTP codes, and more

- Access logs: Sends request information to S3

- CloudTrail logs: Records API activity

High Availability

We can have both external and internal load balancers.

External load balancers are public facing:

- Often used to distribute load between web servers

- Provide a public DNS hostname

Internal load balancers are not customer facing:

- Often used to distribute load between private back-end servers

- Provide an internal DNS hostname

Sticky Sessions

- Maintains a user’s session state by ensuring they are routed to the same target

- ALBs and CLBs use cookies to identify sessions, so clients must support cookies (NLB stickiness is based on the client's source IP address instead)

- Enabled on the target group (Application and Network Load Balancers)

- Enabled on the Classic Load Balancer itself after creation
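Cookie-based stickiness can be modeled as: if the request carries a cookie naming a healthy target, reuse that target; otherwise pick a target normally and set the cookie on the response. The Python sketch below assumes round-robin selection and a readable cookie holding the target name. The cookie name lb-sticky is hypothetical, and real ELB stickiness cookies are opaque values generated by the load balancer.

```python
import itertools


class StickyBalancer:
    """Round-robin balancer with cookie-based stickiness (simplified model)."""

    COOKIE = "lb-sticky"   # hypothetical cookie name; real ELB cookies are opaque

    def __init__(self, targets: list[str]):
        self.targets = targets
        self.healthy = set(targets)
        self._rr = itertools.cycle(targets)

    def _next_healthy(self) -> str:
        # Try each target at most once per call, skipping unhealthy ones
        for _ in range(len(self.targets)):
            target = next(self._rr)
            if target in self.healthy:
                return target
        raise RuntimeError("no healthy targets")

    def handle(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return (chosen target, cookies to set on the response) for one request."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.healthy:
            return pinned, {}                      # sticky: reuse the pinned target
        target = self._next_healthy()              # no cookie, or pinned target unhealthy
        return target, {self.COOKIE: target}       # pin the client to the new target
```

If the pinned target becomes unhealthy, the client is moved to another target and re-pinned, so any session state held only on the old target is lost.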

SSL Offloading

- In a highly available web application, we use load balancers to distribute traffic.

- Their elasticity and scalability can also be applied to HTTPS/SSL processing.

- Encryption and decryption require processing: We can save processing on our instances by transferring the SSL process to the load balancer.

- Individual EC2 instances no longer need their own certificates or have to process encryption and decryption, so application performance should improve.

- AWS Certificate Manager (ACM) also integrates with load balancers for certificate provisioning and management.

Launch templates and launch configurations define the configuration attributes used to launch EC2 instances. Typical settings include:

- AMI to use for EC2 launch

- Instance type

- Storage

- Key pair

- IAM role

- User data

- Purchase options

- Network configuration

- Security group(s)

Launch templates address some of the weaknesses of the legacy launch configurations and add the following features:

- Versioning and inheritance

- Tagging

- More advanced purchasing options

- New instance features, including:

  - Elastic Graphics

  - T2/T3 Unlimited settings

  - Placement groups

  - Capacity reservations

  - Tenancy options

Launch templates should be used over launch configurations where possible. Neither can be edited after creation: instead, create a new version of the launch template or a new launch configuration.
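Versioning with inheritance can be sketched as immutable snapshots: each new version starts from an existing source version and overrides only the settings that change, while older versions stay readable and unchanged. The Python class below is a hypothetical in-memory model, not the EC2 API (which offers a comparable SourceVersion option when creating a launch template version); the setting names and AMI ID are placeholders.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Any, Mapping


@dataclass(frozen=True)
class TemplateVersion:
    number: int
    settings: Mapping[str, Any]   # read-only view; a version is never edited


class LaunchTemplate:
    """Hypothetical in-memory model of a versioned launch template."""

    def __init__(self, name: str, **settings: Any):
        self.name = name
        self.versions = [TemplateVersion(1, MappingProxyType(dict(settings)))]

    def get(self, version: int) -> Mapping[str, Any]:
        if not 1 <= version <= len(self.versions):
            raise KeyError(f"no version {version}")
        return self.versions[version - 1].settings

    def create_version(self, source_version: int, **overrides: Any) -> int:
        """Create a new version that inherits source_version's settings plus overrides."""
        base = self.get(source_version)
        merged = {**base, **overrides}             # overrides win; the rest is inherited
        new = TemplateVersion(len(self.versions) + 1, MappingProxyType(merged))
        self.versions.append(new)
        return new.number


if __name__ == "__main__":
    tpl = LaunchTemplate("web", image_id="ami-EXAMPLE", instance_type="t3.micro")
    v2 = tpl.create_version(1, instance_type="t3.large")
    print(dict(tpl.get(1)))
    print(dict(tpl.get(v2)))
```

Keeping old versions intact means an Auto Scaling group pinned to version 1 is unaffected until it is explicitly switched to version 2.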

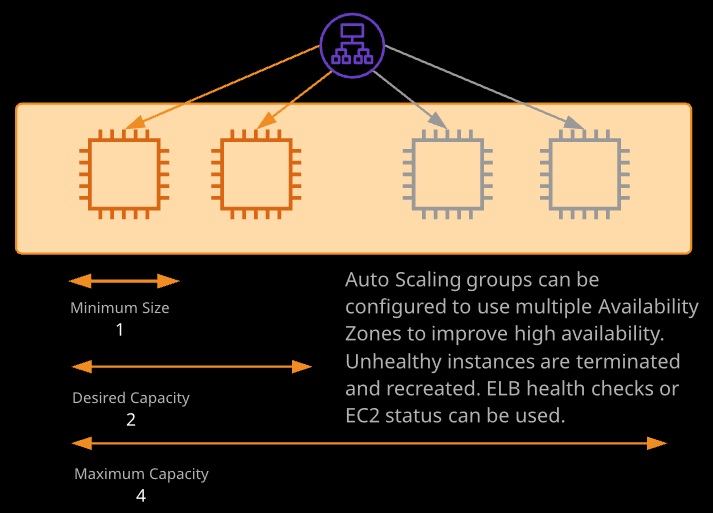

Auto Scaling groups use launch configurations or launch templates and allow automatic scale-out or scale-in based on configurable metrics. Auto Scaling groups are often paired with elastic load balancers.

Metrics such as CPU utilization or network transfer can be used to scale out or scale in through scaling policies. Scaling can be manual, scheduled, or dynamic. Cooldown periods can be defined to prevent rapid successive scale-out and scale-in events.

Scaling policies can be simple, step scaling, or target tracking.
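Target tracking can be approximated as a proportional rule: if the tracked metric is 50% above its target, add roughly 50% more capacity, then clamp to the group's minimum and maximum size. The Python sketch below pairs that approximation with a cooldown window that holds capacity after each change. It is a simplified model under those assumptions, not the exact algorithm Auto Scaling uses, and the class and function names are hypothetical.

```python
import math
from typing import Optional


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Proportional estimate of the capacity that brings the metric back to target."""
    desired = math.ceil(current * metric / target)   # e.g. 50% over target -> ~50% more
    return max(min_size, min(max_size, desired))     # clamp to the group's limits


class ScalingController:
    def __init__(self, target: float, min_size: int, max_size: int, cooldown_s: float):
        self.target = target
        self.min_size = min_size
        self.max_size = max_size
        self.cooldown_s = cooldown_s
        self.last_change_at: Optional[float] = None

    def evaluate(self, now: float, current: int, metric: float) -> int:
        """Return the capacity the group should have after this evaluation."""
        if self.last_change_at is not None and now - self.last_change_at < self.cooldown_s:
            return current                             # still cooling down: hold capacity
        desired = desired_capacity(current, metric, self.target,
                                   self.min_size, self.max_size)
        if desired != current:
            self.last_change_at = now                  # a change starts a new cooldown
        return desired
```

For example, with a 60% CPU target and a 300-second cooldown, 4 instances at 90% CPU scale out to 6; a scale-in signal (30% CPU) 100 seconds later is ignored, and the same signal at 400 seconds scales in to 3.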