DependsOn

DependsOn is a way to say that a resource can't be created before another resource has been created. Consider a template:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Mappings:
  AWSRegionArch2AMI:
    us-east-1:
      HVM64: ami-6869aa05
    us-west-2:
      HVM64: ami-7172b611
    us-west-1:
      HVM64: ami-31490d51
    eu-west-1:
      HVM64: ami-f9dd458a
    eu-central-1:
      HVM64: ami-ea26ce85
    ap-northeast-1:
      HVM64: ami-374db956
    ap-northeast-2:
      HVM64: ami-2b408b45
    ap-southeast-1:
      HVM64: ami-a59b49c6
    ap-southeast-2:
      HVM64: ami-dc361ebf
    ap-south-1:
      HVM64: ami-ffbdd790
    us-east-2:
      HVM64: ami-f6035893
    sa-east-1:
      HVM64: ami-6dd04501
    cn-north-1:
      HVM64: ami-8e6aa0e3
Resources:
  Ec2Instance:
    Type: AWS::EC2::Instance
    Properties:
      ImageId: !FindInMap [AWSRegionArch2AMI, !Ref 'AWS::Region', HVM64]
    DependsOn: MyDB
  MyDB:
    Type: AWS::RDS::DBInstance
    Properties:
      AllocatedStorage: '5'
      DBInstanceClass: db.t2.micro
      Engine: MySQL
      EngineVersion: "5.7.22"
      MasterUsername: MyName
      MasterUserPassword: MyPassword
    # Readme - note: Added a DeletionPolicy (not shown in the video)
    # This ensures that the RDS DBInstance does not create a snapshot when it's deleted
    # Otherwise, you would be billed for the snapshot :)
    DeletionPolicy: Delete
```

The EC2 instance will not be created until the MyDB resource has been created successfully.
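To make the ordering idea concrete, here is a minimal sketch in Python of how explicit DependsOn entries turn into a creation order. It is an illustration only, not how CloudFormation is implemented: the `creation_order` helper is hypothetical, and it ignores the implicit dependencies that CloudFormation also derives from `!Ref` and `!GetAtt`.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def creation_order(resources):
    """Return logical IDs in an order that respects explicit DependsOn."""
    graph = {}
    for logical_id, body in resources.items():
        depends_on = body.get("DependsOn", [])
        # DependsOn may be a single logical ID or a list of them.
        if isinstance(depends_on, str):
            depends_on = [depends_on]
        # Each node maps to the set of resources that must exist first.
        graph[logical_id] = set(depends_on)
    return list(TopologicalSorter(graph).static_order())


# Simplified version of the Resources section from the template.
resources = {
    "Ec2Instance": {"Type": "AWS::EC2::Instance", "DependsOn": "MyDB"},
    "MyDB": {"Type": "AWS::RDS::DBInstance"},
}
order = creation_order(resources)
print(order)
```

In this sketch MyDB comes before Ec2Instance, which matches the behaviour described for the template.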

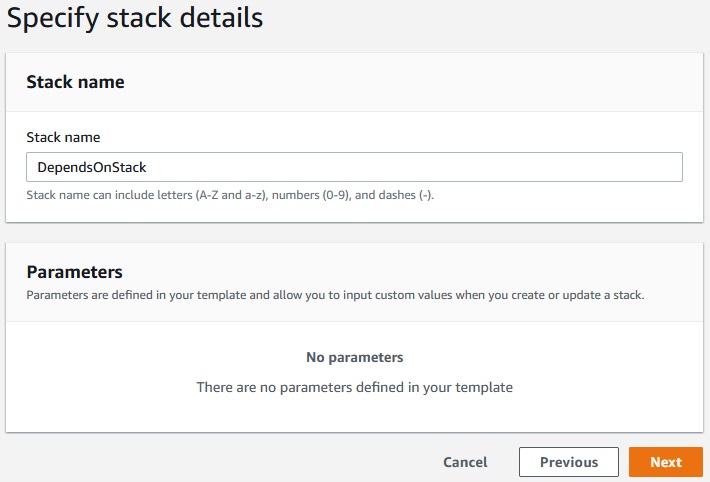

Let’s run the template:

CloudFormation -> Stacks -> Create stack

Next -> Next -> Create Stack

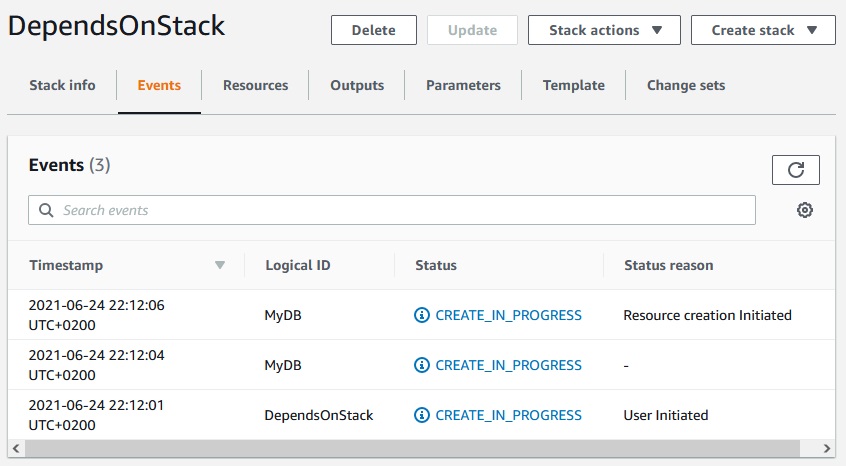

As we can see, MyDB is being created as the first resource in the stack.

Deploying Lambda Functions

The first way of defining a Lambda function in CloudFormation is inline.

Consider the following template:

```yaml
Resources:
  LambdaExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service:
            - lambda.amazonaws.com
          Action:
          - sts:AssumeRole
      Path: "/"
      Policies:
      - PolicyName: root
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
          - Effect: Allow
            Action:
            - "s3:*"
            Resource: "*"
          - Effect: Allow
            Action:
            - "logs:CreateLogGroup"
            - "logs:CreateLogStream"
            - "logs:PutLogEvents"
            Resource: "*"
  ListBucketsS3Lambda:
    Type: "AWS::Lambda::Function"
    Properties:
      Handler: "index.handler"
      Role:
        Fn::GetAtt:
        - "LambdaExecutionRole"
        - "Arn"
      Runtime: "python3.7"
      Code:
        ZipFile: |
          import boto3

          # Create an S3 client
          s3 = boto3.client('s3')

          def handler(event, context):
              # Call S3 to list current buckets
              response = s3.list_buckets()

              # Get a list of all bucket names from the response
              buckets = [bucket['Name'] for bucket in response['Buckets']]

              # Print out the bucket list
              print("Bucket List: %s" % buckets)

              return buckets
```

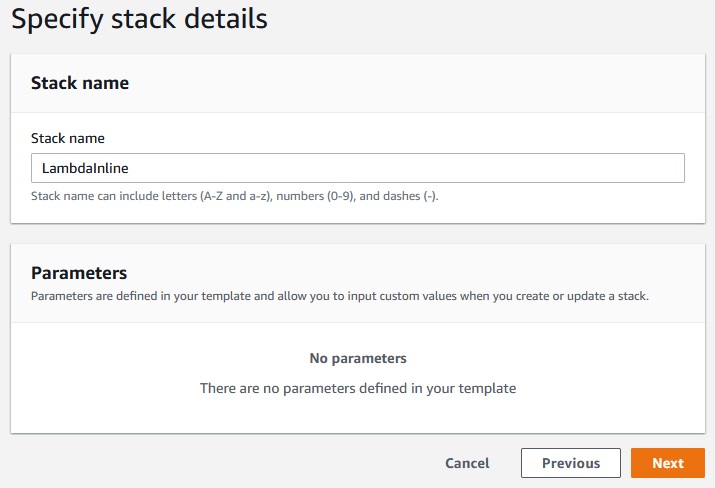

Let’s run the template:

CloudFormation -> Stacks -> Create stack

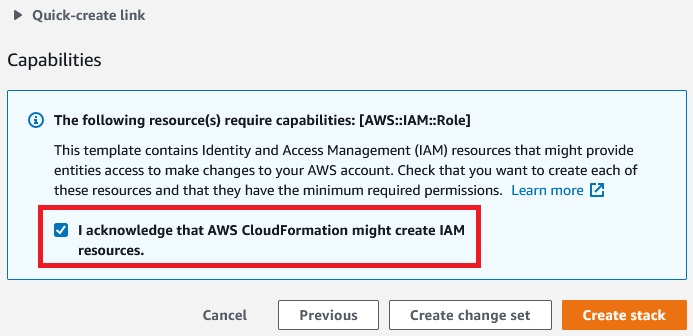

Next -> Next -> Create stack -> Requires capabilities : [CAPABILITY_IAM] -> Review LambdaInline

Create stack ->

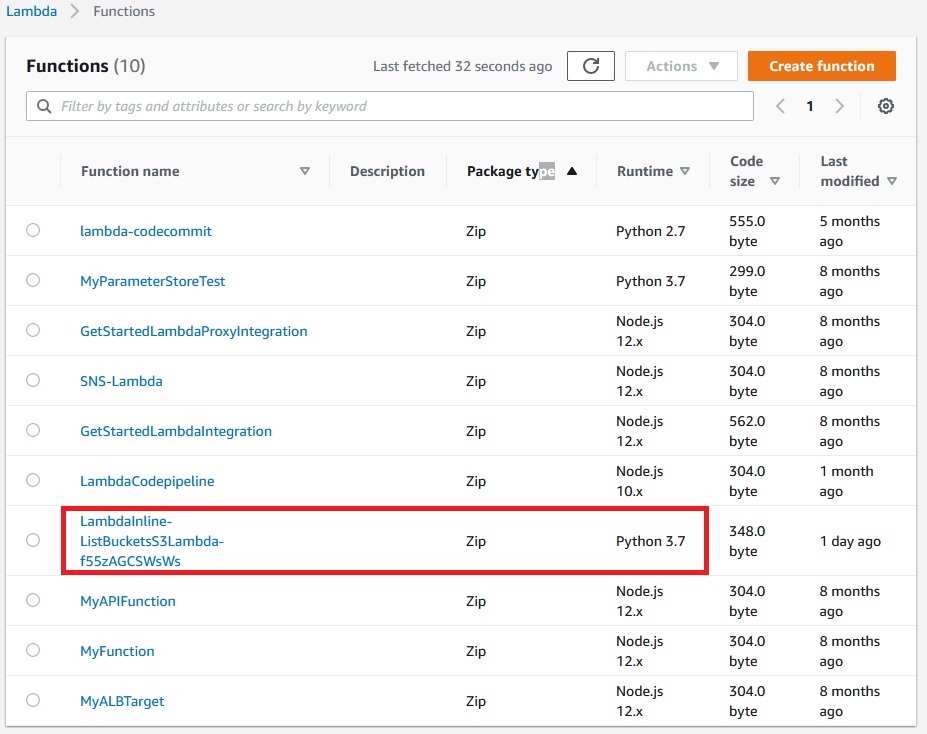

As we can see, the Lambda function has been created:

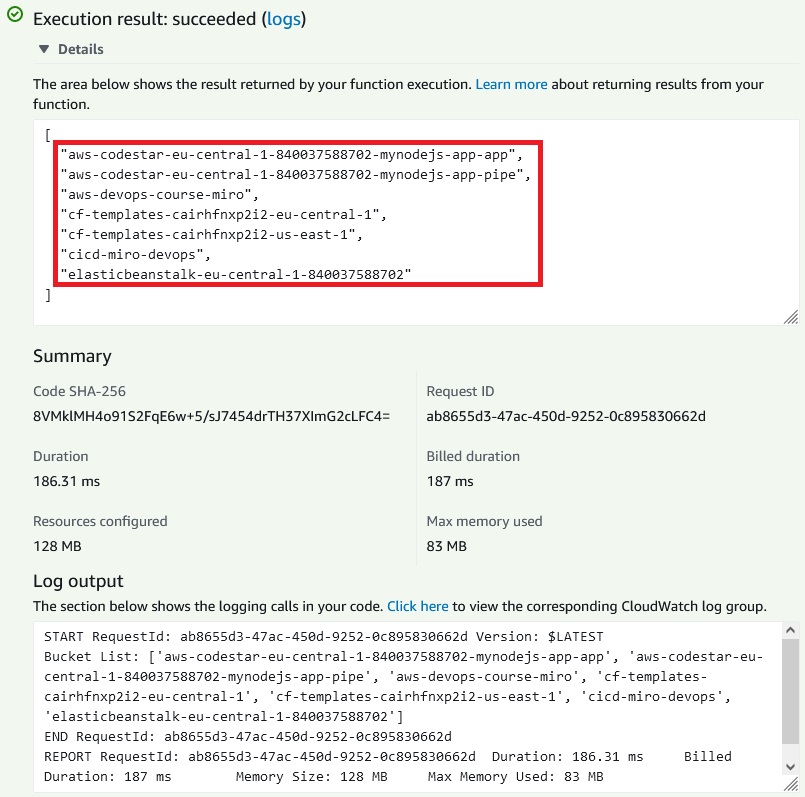

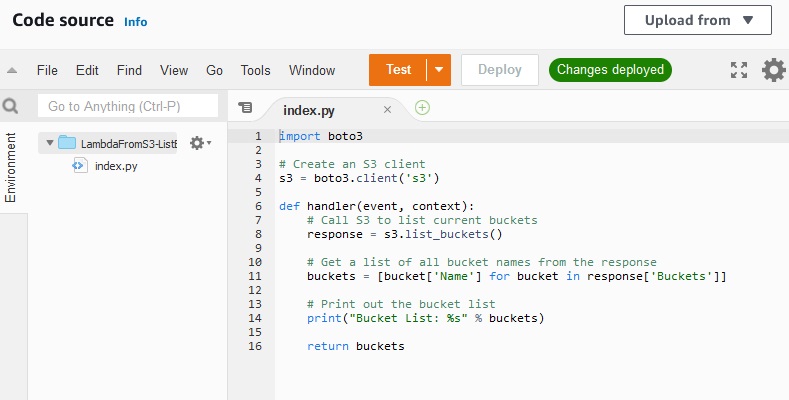

Let’s test the lambda function:

The Lambda function simply lists all S3 buckets that we have in the AWS account.
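The same logic can also be tried locally without an AWS account. The sketch below is an assumption-laden variant of the handler: the S3 client is passed in as an argument (the original creates it at module level with boto3), and `FakeS3Client` is a hypothetical stand-in that returns canned data in the shape of a `list_buckets` response.

```python
class FakeS3Client:
    """Hypothetical stand-in for boto3.client('s3') that returns canned data."""

    def list_buckets(self):
        return {"Buckets": [{"Name": "bucket-a"}, {"Name": "bucket-b"}]}


def handler(event, context, s3_client):
    # Call S3 (or the stand-in) to list current buckets
    response = s3_client.list_buckets()

    # Get a list of all bucket names from the response
    buckets = [bucket["Name"] for bucket in response["Buckets"]]

    # Print out the bucket list
    print("Bucket List: %s" % buckets)
    return buckets


# The event and context are not used by this handler, so empty values suffice.
result = handler({}, None, FakeS3Client())
```

In the deployed function the client would be the real `boto3.client('s3')`, so the returned names are those of the buckets in the account.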

The second way of defining a Lambda function in CloudFormation is to use a zip file stored in S3.

First we need to zip an index.py file:

```python
import boto3

# Create an S3 client
s3 = boto3.client('s3')

def handler(event, context):
    # Call S3 to list current buckets
    response = s3.list_buckets()

    # Get a list of all bucket names from the response
    buckets = [bucket['Name'] for bucket in response['Buckets']]

    # Print out the bucket list
    print("Bucket List: %s" % buckets)

    return buckets
```
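If you prefer to build the archive from a script instead of a file manager, a minimal sketch with Python's standard `zipfile` module could look like this. The helper name `build_lambda_zip` is an assumption made for illustration.

```python
import zipfile
from pathlib import Path


def build_lambda_zip(source_file, zip_path):
    """Write source_file into a new zip archive at zip_path."""
    source_file = Path(source_file)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        # arcname keeps index.py at the archive root, so that the
        # "index.handler" setting in the template can find the module.
        archive.write(source_file, arcname=source_file.name)
    return zip_path
```

Calling `build_lambda_zip("index.py", "lambda-function.zip")` in the directory that contains index.py should produce an archive with index.py at its root.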

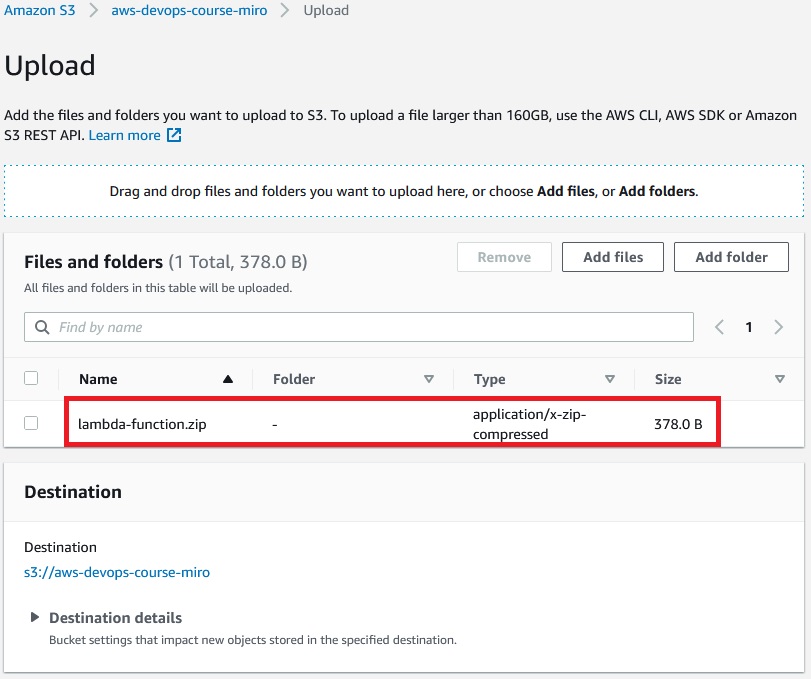

And then upload the zip file to the S3 service.

Upload->

In the CloudFormation template file we need to reference the uploaded zip file:

```yaml
Parameters:
  S3BucketParam:
    Type: String
  S3KeyParam:
    Type: String

Resources:
  LambdaExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service:
            - lambda.amazonaws.com
          Action:
          - sts:AssumeRole
      Path: "/"
      Policies:
      - PolicyName: root
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
          - Effect: Allow
            Action:
            - "s3:*"
            Resource: "*"
          - Effect: Allow
            Action:
            - "logs:CreateLogGroup"
            - "logs:CreateLogStream"
            - "logs:PutLogEvents"
            Resource: "*"
  ListBucketsS3Lambda:
    Type: "AWS::Lambda::Function"
    Properties:
      Handler: "index.handler"
      Role:
        Fn::GetAtt:
        - "LambdaExecutionRole"
        - "Arn"
      Runtime: "python3.7"
      Code:
        S3Bucket: !Ref S3BucketParam
        S3Key: !Ref S3KeyParam
```

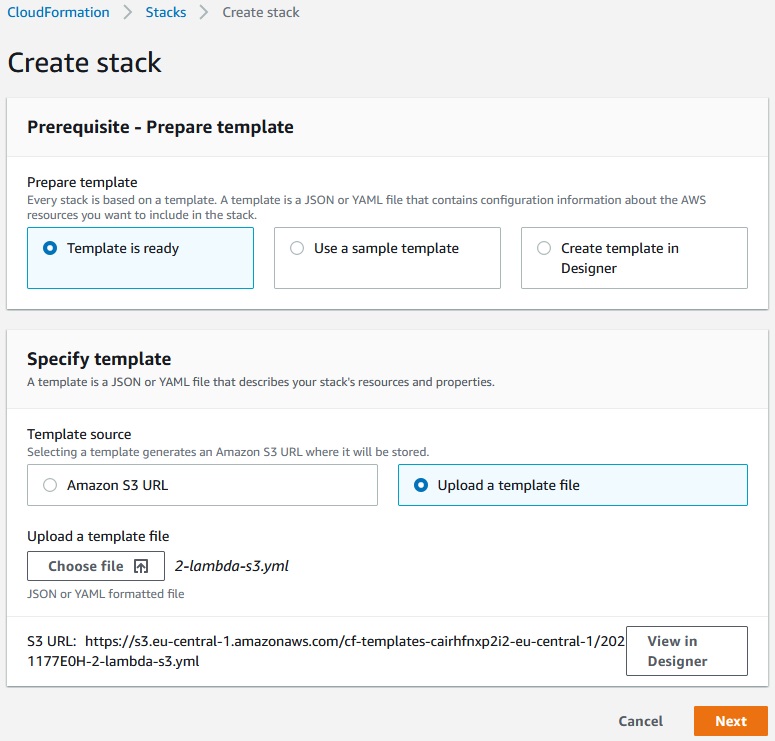

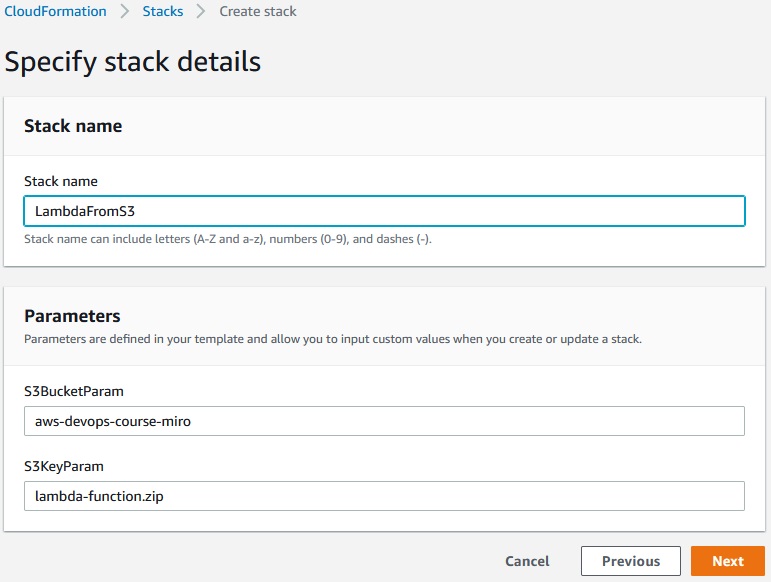

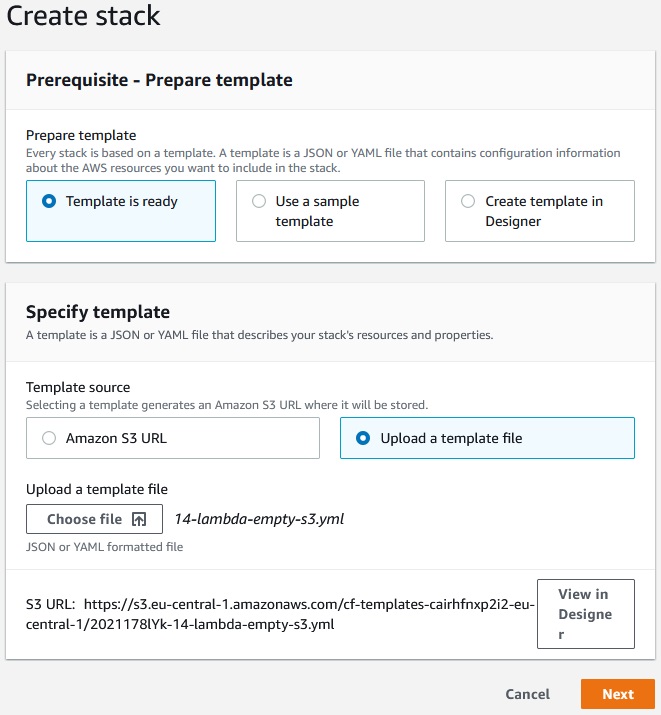

Now let’s create a stack:

Next ->

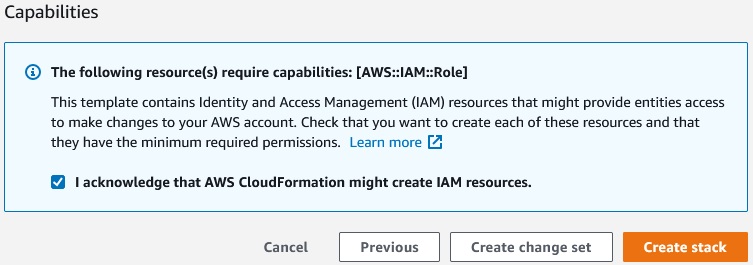

Next -> Next ->

Create stack ->

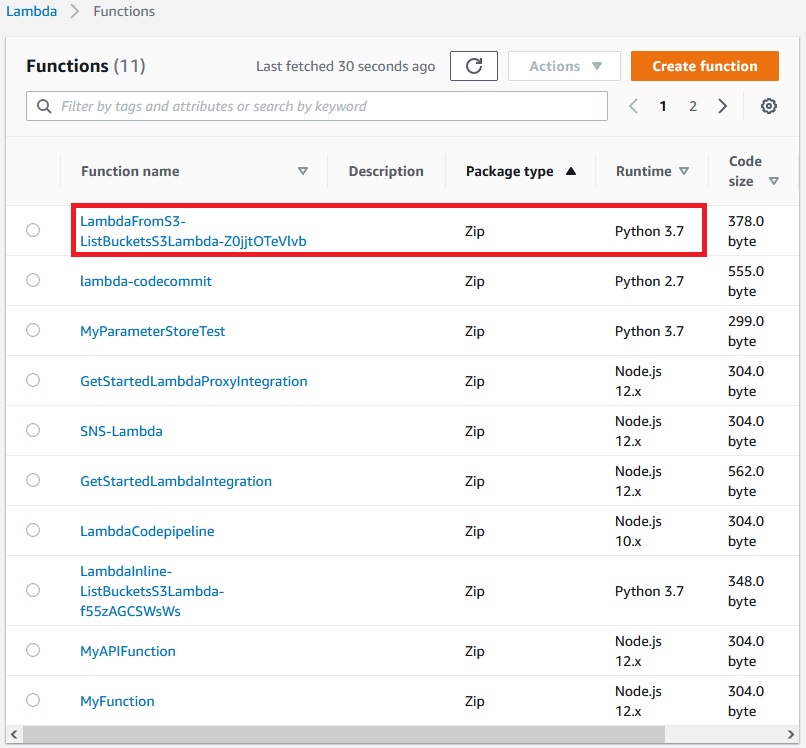

A new lambda function has been created:

The code of the new function is the same as the old code because CloudFormation retrieved the zip file and unzipped it.

What if we want to upload a new version of lambda-function.zip? CloudFormation only updates the function code when S3Bucket, S3Key or S3ObjectVersion changes, so we could pass a different value for S3BucketParam or S3KeyParam, but a better way is to use a template with versioning:

```yaml
Parameters:
  S3BucketParam:
    Type: String
  S3KeyParam:
    Type: String
  S3ObjectVersionParam:
    Type: String

Resources:
  LambdaExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service:
            - lambda.amazonaws.com
          Action:
          - sts:AssumeRole
      Path: "/"
      Policies:
      - PolicyName: root
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
          - Effect: Allow
            Action:
            - "s3:*"
            Resource: "*"
          - Effect: Allow
            Action:
            - "logs:CreateLogGroup"
            - "logs:CreateLogStream"
            - "logs:PutLogEvents"
            Resource: "*"
  ListBucketsS3Lambda:
    Type: "AWS::Lambda::Function"
    Properties:
      Handler: "index.handler"
      Role:
        Fn::GetAtt:
        - "LambdaExecutionRole"
        - "Arn"
      Runtime: "python3.7"
      Code:
        S3Bucket:
          Ref: S3BucketParam
        S3Key:
          Ref: S3KeyParam
        S3ObjectVersion:
          Ref: S3ObjectVersionParam
```

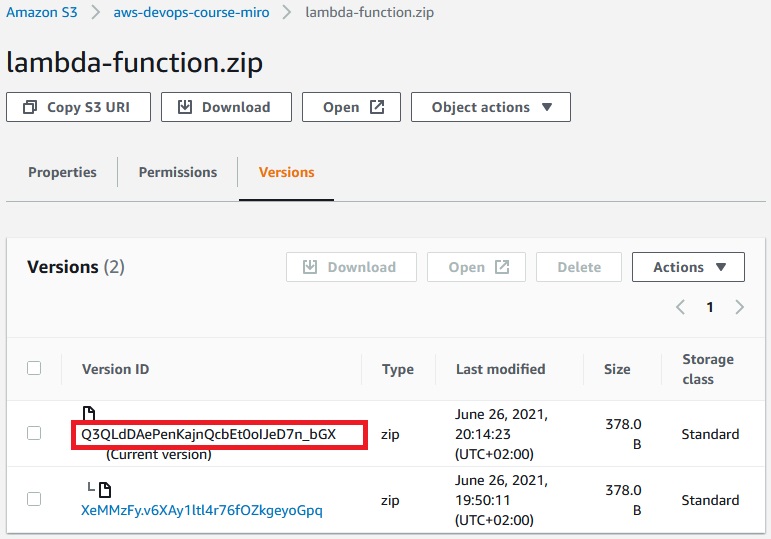

Let’s upload the same lambda-function.zip file to the S3 bucket and check the version-id:

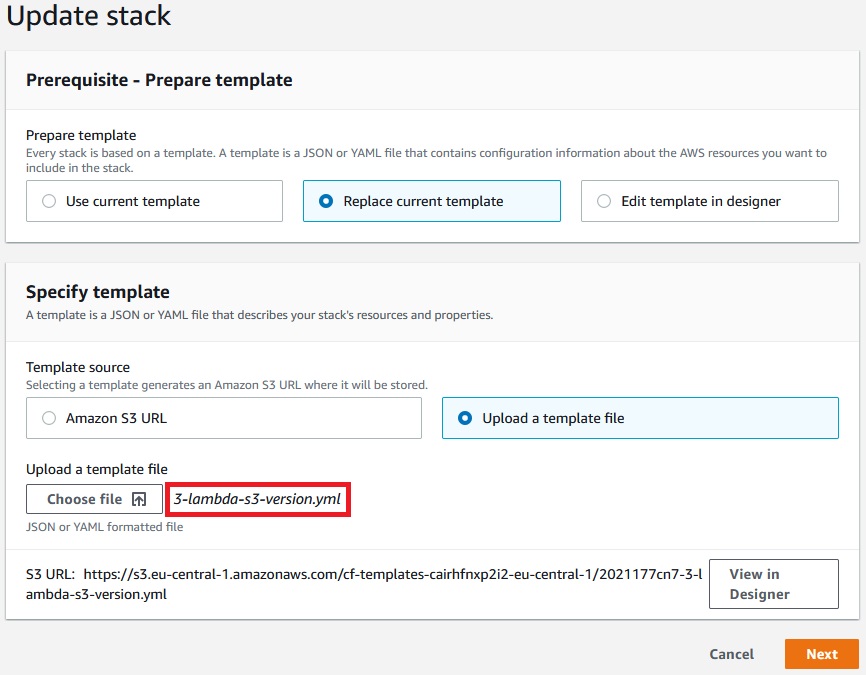

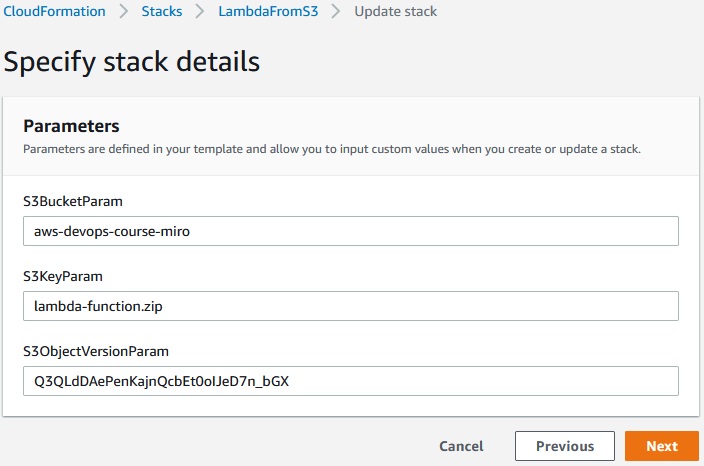

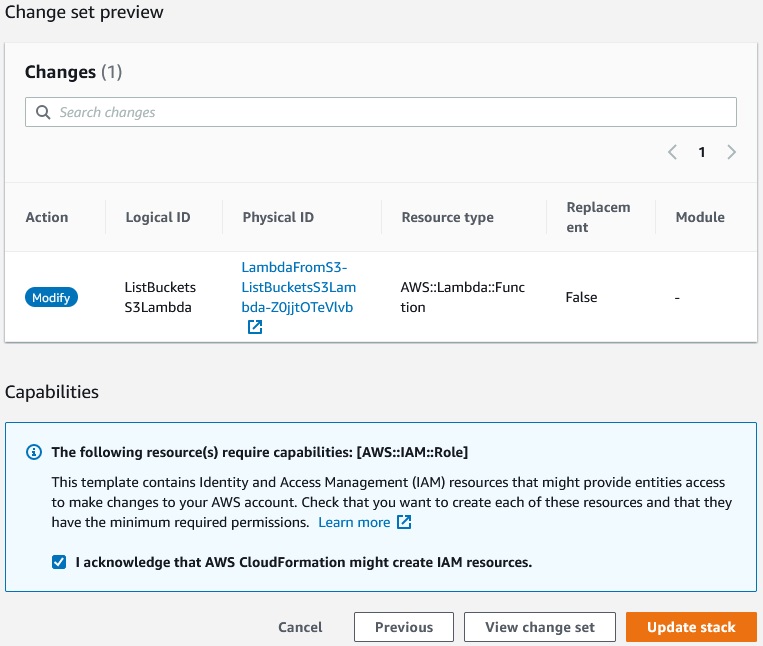
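The version ID can also be read from a script instead of the console. The following is a minimal sketch, assuming a boto3 S3 client is passed in and that versioning is enabled on the bucket. The helper names are hypothetical; the parameter keys are the ones declared in the template.

```python
def current_version_id(s3_client, bucket, key):
    """Return the version ID of the latest object stored under key."""
    response = s3_client.head_object(Bucket=bucket, Key=key)
    # "VersionId" is only present when versioning is enabled on the bucket.
    return response.get("VersionId")


def stack_parameters(bucket, key, version_id):
    """Build a parameter list in the shape boto3's CloudFormation calls expect."""
    return [
        {"ParameterKey": "S3BucketParam", "ParameterValue": bucket},
        {"ParameterKey": "S3KeyParam", "ParameterValue": key},
        {"ParameterKey": "S3ObjectVersionParam", "ParameterValue": version_id},
    ]
```

With real credentials, `current_version_id(boto3.client("s3"), bucket, "lambda-function.zip")` would return the value to enter for S3ObjectVersionParam when updating the stack.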

Now let’s update the stack:

Next ->

Next ->

Update stack ->

Custom Resources

You can define a Custom Resource in CloudFormation to address any of these use cases:

- An AWS resource that is not yet covered (a new service, for example)

- An on-premises resource

- Emptying an S3 bucket before it is deleted

- Fetching an AMI ID

- Anything you want…!

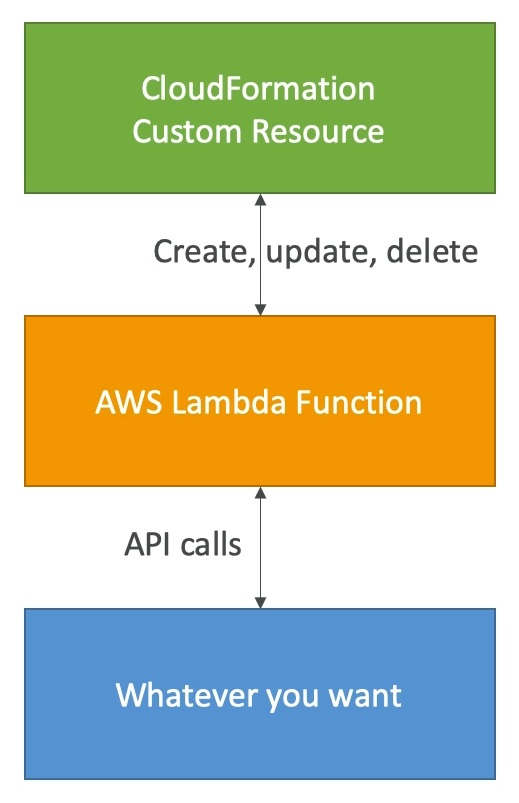

CloudFormation Custom Resources (Lambda)

- The Lambda Function will get invoked only if there is a Create, Update or Delete event, not every time you run the template

```json
{
  "RequestType" : "Create",
  "ResponseURL" : "http://pre-signed-S3-url-for-response",
  "StackId" : "arn:aws:cloudformation:us-west-2:123456789012:stack/stack-name/guid",
  "RequestId" : "unique id for this create request",
  "ResourceType" : "Custom::TestResource",
  "LogicalResourceId" : "MyTestResource",
  "ResourceProperties" : {
    "Name" : "Value",
    "List" : [ "1", "2", "3" ]
  }
}
```
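To show how a handler might read such a request, here is a small sketch that picks out the fields most handlers need and rejects an unknown request type. The `summarize_request` helper and the keys of the dictionary it returns are assumptions for illustration; the event keys are the ones from the request above.

```python
def summarize_request(event):
    """Pick out the fields a custom resource handler usually needs."""
    request_type = event["RequestType"]
    if request_type not in ("Create", "Update", "Delete"):
        raise ValueError("Unexpected RequestType: %s" % request_type)
    return {
        "request_type": request_type,
        "response_url": event["ResponseURL"],
        "properties": event.get("ResourceProperties", {}),
    }


sample_event = {
    "RequestType": "Create",
    "ResponseURL": "http://pre-signed-S3-url-for-response",
    "StackId": "arn:aws:cloudformation:us-west-2:123456789012:stack/stack-name/guid",
    "RequestId": "unique id for this create request",
    "ResourceType": "Custom::TestResource",
    "LogicalResourceId": "MyTestResource",
    "ResourceProperties": {"Name": "Value", "List": ["1", "2", "3"]},
}
summary = summarize_request(sample_event)
print(summary["request_type"], summary["properties"]["Name"])
```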

Consider a YAML template that defines the Lambda function inline:

```yaml
Resources:
  LambdaExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service:
            - lambda.amazonaws.com
          Action:
          - sts:AssumeRole
      Path: "/"
      Policies:
      - PolicyName: root
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
          - Effect: Allow
            Action:
            - "s3:*"
            Resource: "*"
          - Effect: Allow
            Action:
            - "logs:CreateLogGroup"
            - "logs:CreateLogStream"
            - "logs:PutLogEvents"
            Resource: "*"
  EmptyS3BucketLambda:
    Type: "AWS::Lambda::Function"
    Properties:
      Handler: "index.handler"
      Role:
        Fn::GetAtt:
        - "LambdaExecutionRole"
        - "Arn"
      Runtime: "python3.7"
      # we give the function a large timeout
      # so we can wait for the bucket to be empty
      Timeout: 600
      Code:
        ZipFile: |
          #!/usr/bin/env python
          # -*- coding: utf-8 -*-

          import json
          import boto3
          from botocore.vendored import requests

          def handler(event, context):
              try:
                  bucket = event['ResourceProperties']['BucketName']

                  if event['RequestType'] == 'Delete':
                      s3 = boto3.resource('s3')
                      bucket = s3.Bucket(bucket)
                      for obj in bucket.objects.filter():
                          s3.Object(bucket.name, obj.key).delete()

                  sendResponseCfn(event, context, "SUCCESS")
              except Exception as e:
                  print(e)
                  sendResponseCfn(event, context, "FAILED")

          def sendResponseCfn(event, context, responseStatus):
              response_body = {'Status': responseStatus,
                               'Reason': 'Log stream name: ' + context.log_stream_name,
                               'PhysicalResourceId': context.log_stream_name,
                               'StackId': event['StackId'],
                               'RequestId': event['RequestId'],
                               'LogicalResourceId': event['LogicalResourceId'],
                               'Data': json.loads("{}")}

              requests.put(event['ResponseURL'], data=json.dumps(response_body))

Outputs:
  StackSSHSecurityGroup:
    Description: The ARN of the Lambda function that empties an S3 bucket
    Value: !GetAtt EmptyS3BucketLambda.Arn
    Export:
      Name: EmptyS3BucketLambda
```
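The function above sends its result with `botocore.vendored.requests`, which may not be available in newer runtimes. As a hedged alternative, the response step can be sketched with the standard library only. The function names and the `opener` argument (added so the call can be exercised without network access) are assumptions; the empty Content-Type header mirrors what AWS's own cfn-response helper does, to the best of my knowledge.

```python
import json
import urllib.request


def build_response_body(event, context, status, data=None):
    """Build the response document that CloudFormation waits for."""
    return {
        "Status": status,
        "Reason": "Log stream name: " + context.log_stream_name,
        "PhysicalResourceId": context.log_stream_name,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def send_response(event, context, status, opener=urllib.request.urlopen):
    """PUT the response document to the pre-signed URL from the event."""
    body = json.dumps(build_response_body(event, context, status)).encode("utf-8")
    request = urllib.request.Request(
        event["ResponseURL"],
        data=body,
        method="PUT",
        # Assumption: an empty Content-Type avoids urllib adding a default
        # one that the pre-signed URL was not created for.
        headers={"Content-Type": ""},
    )
    return opener(request)
```

Inside the handler, `send_response(event, context, "SUCCESS")` would take the place of `sendResponseCfn(event, context, "SUCCESS")`.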

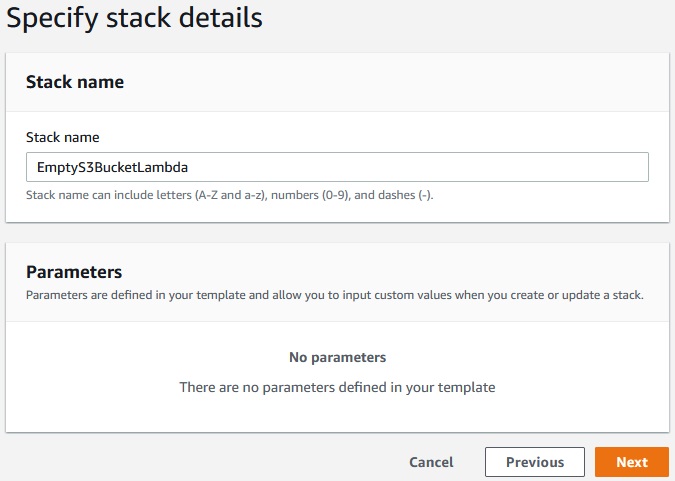

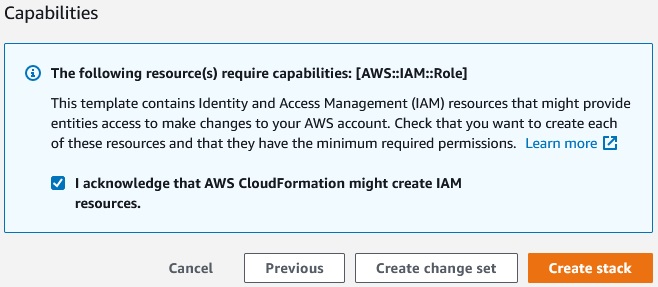

Let’s create a stack:

Next ->

Next ->

Create stack ->

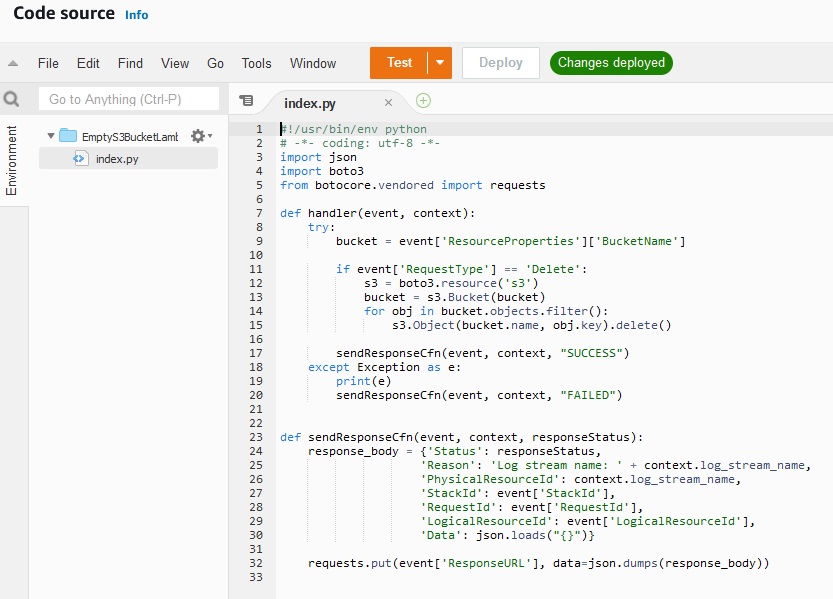

The Lambda function has been created.

Consider a YAML template with a custom resource:

```yaml
---
AWSTemplateFormatVersion: '2010-09-09'

Resources:

  myBucketResource:
    Type: AWS::S3::Bucket

  LambdaUsedToCleanUp:
    Type: Custom::cleanupbucket
    Properties:
      ServiceToken: !ImportValue EmptyS3BucketLambda
      BucketName: !Ref myBucketResource
```

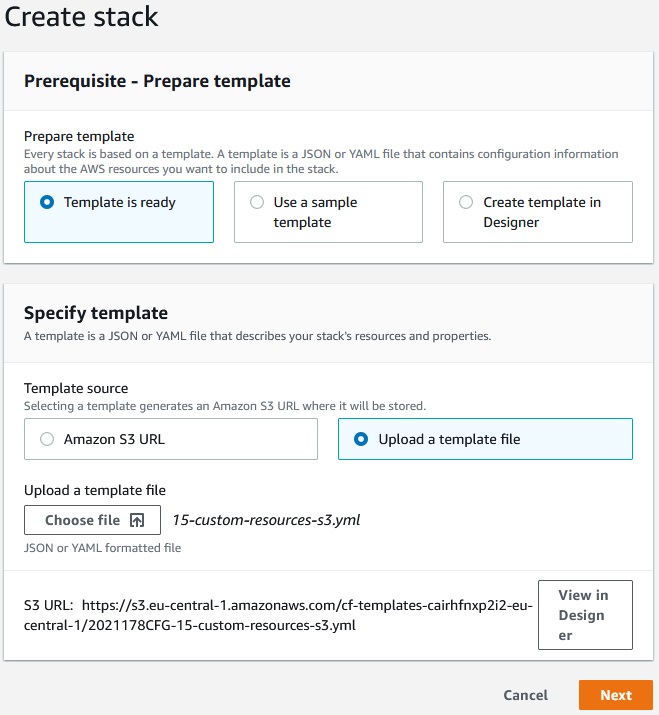

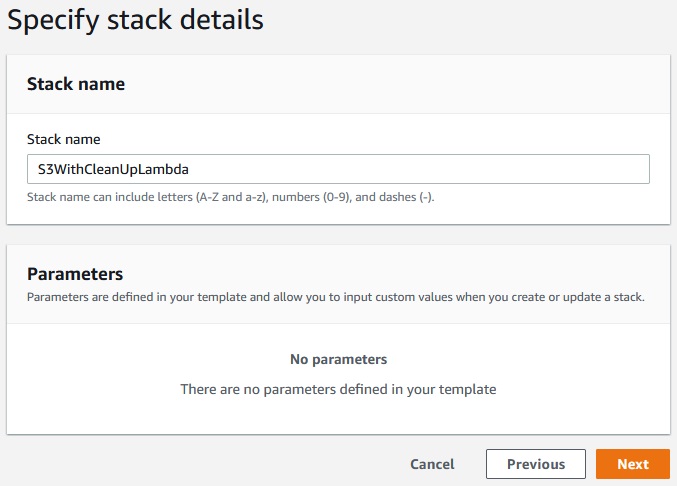

Now let’s create a stack with the custom resource:

Next ->

Next -> Create stack

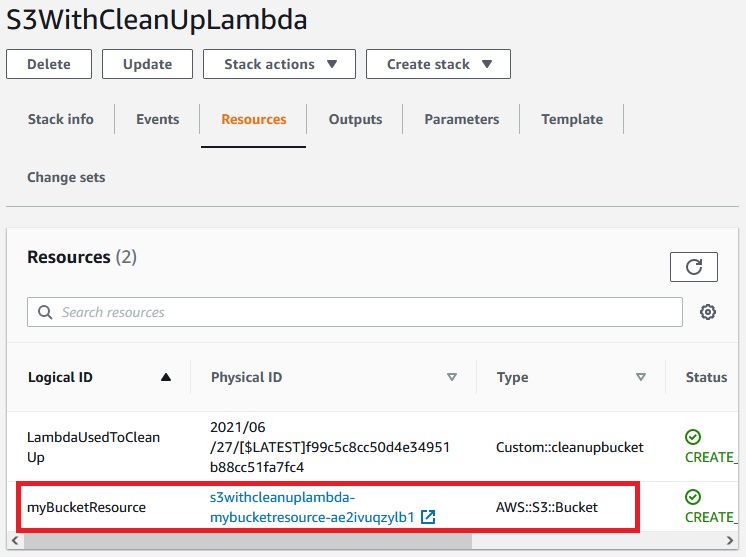

After the new stack has been created, we can go to the resources and see that the S3 bucket has been created as well:

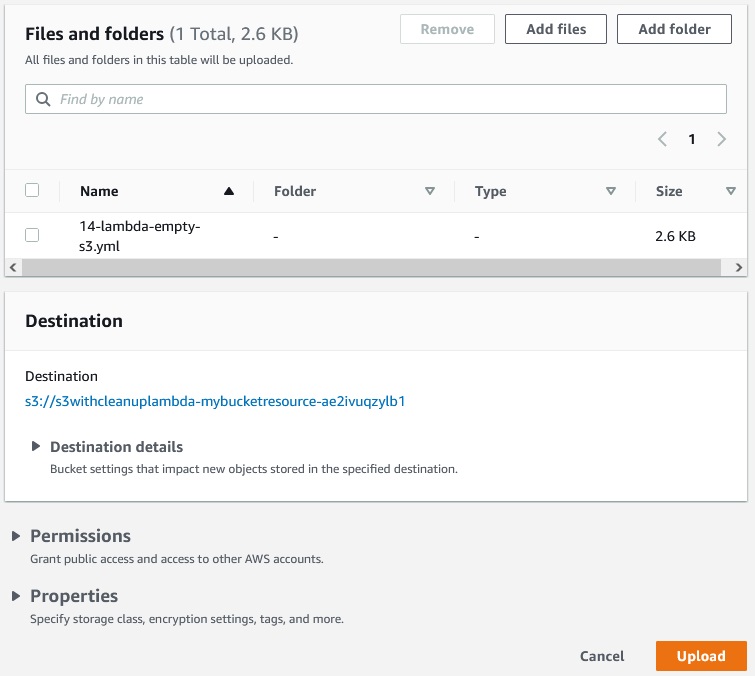

We can go to this S3 bucket and upload a random file there:

Upload ->

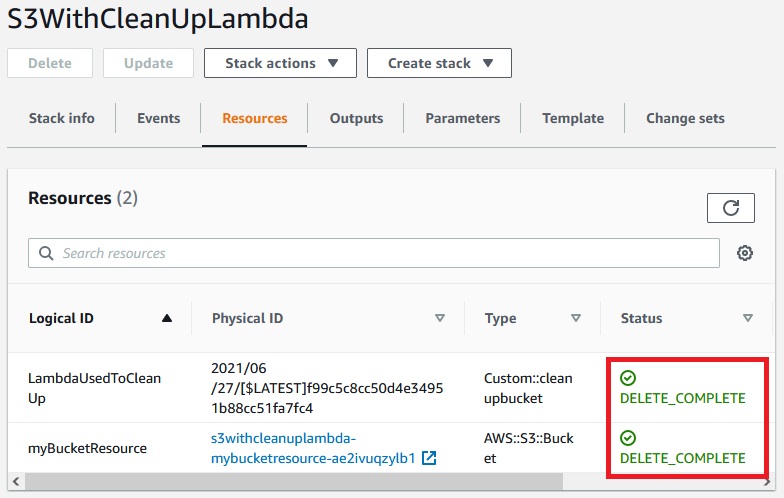

Now let’s delete the stack.

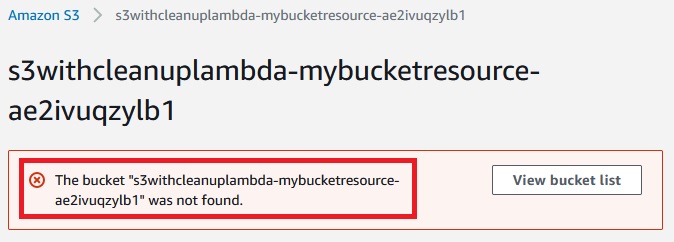

While the stack was being deleted, the Lambda function emptied the S3 bucket, so the bucket itself could be deleted.